Review on the New Google Update – Big Daddy - New year special Bigdaddy

Review of New Google Update

Big Daddy:

Matt Cutts, a senior engineer at Google, has asked for feedback on the new results, which are live on the test datacenters 64.233.179.104, 64.233.179.99, etc.

Matt Cutts has semi-officially asked for feedback on the new index. Currently the new results are live on http://66.249.93.104, http://66.249.93.99, etc. The 66.249 range is steady and has been showing the new results since 1 January 2006. Although Matt said 64.233.179.104 would also be a test DC, we don't find that datacenter to be steady; test results come and go there.
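To compare the same query on the live index and on the test DCs, it helps to generate one search URL per datacenter. Here is a minimal Python sketch of that. The function name `comparison_urls` and the `DATACENTERS` list are our own illustration, and the IP addresses are simply the ones quoted in this review, so they may not keep answering.

```python
from urllib.parse import urlencode

# Hosts quoted in this review: the main index plus the steady 66.249 test DCs.
# These addresses are taken from the post and may stop responding at any time.
DATACENTERS = ["www.google.com", "66.249.93.104", "66.249.93.99"]


def comparison_urls(query, hosts=DATACENTERS):
    """Return a dict mapping each host to a search URL for the same query."""
    params = urlencode({"hl": "en", "q": query})
    return {host: f"http://{host}/search?{params}" for host in hosts}


if __name__ == "__main__":
    # Print the URLs so they can be opened side by side in a browser.
    for host, url in comparison_urls("site:dmoz.org").items():
        print(host, url)
```

Opening the printed URLs side by side is how the comparisons in the rest of this review were made by hand; the sketch only saves the typing.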

Looking at the results on the test DC, we see many issues fixed:

1. Better logical arrangement of pages in site: and domain-only searches

Whether deliberately or by mistake, Google had been unable to arrange site: search and domain-only search results logically. The homepage is the most important page for many sites, yet Google buried it in site:domain.com and domain-only searches. In the new datacenter we see a more logical arrangement: a site's pages are listed in proper order compared with the other datacenters. Yahoo has been excellent at this and still shows the best arrangement of URLs for a site: search. Google has been known to hide a lot of useful information from end users, especially in the link: search. It purposely shows less than 5% of a site's links through link:, which confuses many webmasters, so it probably does the same with site: search. At least there is a fix for the homepage in the new Big Daddy datacenters.

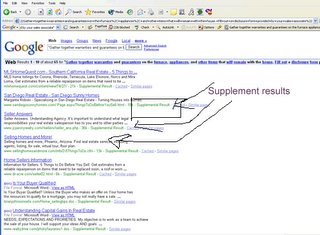

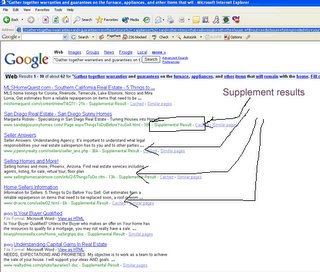

2. Removal of URL-only entries / URL-only supplemental results

For a very long time Google has shown URL-only listings in SERPs (search engine result pages) for some sites. The most likely reasons for URL-only listings and supplemental results are: duplicate pages within a site or across sites; pages with no links pointing to them; pages that were once crawlable but no longer are; pages that no longer exist; pages Google has not crawled for a very long time, possibly because of some automated penalty; pages that were once crawled well but became uncrawlable because of web host problems; and redirect URLs. Google seems to have fixed this major issue. URL-only listings add no value to Google's index, and it is good that they have been removed from the search results. We still see some supplemental pages here and there, but most of them seem to be caused by duplicate content across sites.

Google has recently been very severe on duplicate content, especially syndicated articles. If many copies of the same article are published across sites, Google makes some of the duplicate copies supplemental results. Only these results seem to stick in the new DC; apart from them, we found no supplemental results.

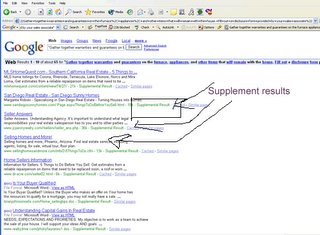

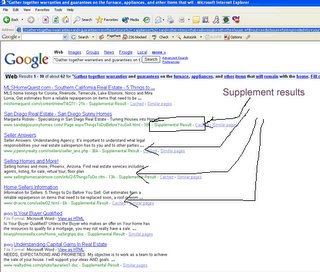

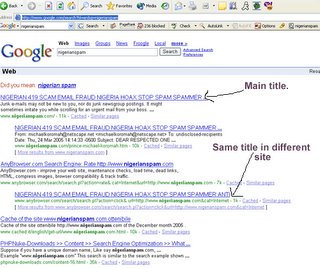

For example, this is the result on the main www.google.com DC.

Result in the updated datacenter (new DC: 66.249.93.104, verified).

Good indexing of pages for a site:

Google has recently been showing vague page counts for the sites it indexes. For one site we work on, Google showed 16,000 pages indexed, whereas the site itself has no more than 1,000 pages. The new DC shows only 550 pages indexed, which is a good sign. All 550 are unique pages without supplementals, and we can expect them to rank soon. This is a very good improvement. We have seen the same accuracy across many sites we monitor: the new DC gives much better page counts.

Better URL canonicalization:

We see a big improvement in Google's understanding of the www and non-www versions of a site, and we have checked it across a lot of sites. For example, for the searches dmoz.org and www.dmoz.org, Google now lists only one version of the site, dmoz.org. Previously it showed one version as a URL-only listing and the other as a real listing, which caused a lot of duplicate issues. We see the same fix across the sites we monitor. Most of the results are now very good because Google handles redirects well, which is a very good sign for a lot of sites. The biggest beneficiaries are IIS-hosted sites, which don't have .htaccess files. Many such sites were unable to 301 redirect to a single version of the URL, either www or non-www. Now they don't have to, since Google can understand that both URLs belong to the same site.
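For sites that can still control their redirects, the rule itself is simple: pick one host form and 301 every request for the other form to it. The Python sketch below shows that rule only. It is our own illustration, not how Google canonicalizes URLs, and the names `canonical_url` and `redirect_for` are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def canonical_url(url, prefer_www=False):
    """Rewrite a URL so its host uses a single form (www or non-www).

    Assumption for this sketch: user:password@ credentials in the URL are
    dropped, and an empty path is normalised to "/".
    """
    parts = urlsplit(url)
    host = parts.hostname or ""
    bare = host[4:] if host.startswith("www.") else host
    target = "www." + bare if prefer_www else bare
    netloc = target if parts.port is None else f"{target}:{parts.port}"
    return urlunsplit(
        (parts.scheme, netloc, parts.path or "/", parts.query, parts.fragment)
    )


def redirect_for(url, prefer_www=False):
    """Return (301, target) if the URL is not canonical, otherwise None."""
    target = canonical_url(url, prefer_www)
    return None if target == url else (301, target)
```

With the non-www form preferred, as in the dmoz.org example, a request for http://www.dmoz.org/Arts/ would be answered with a 301 to http://dmoz.org/Arts/, while http://dmoz.org/Arts/ needs no redirect.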

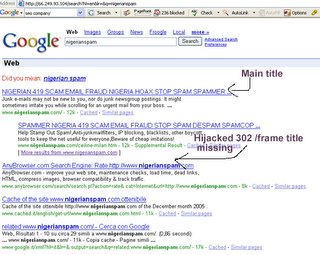

Better handling of 302 redirects:

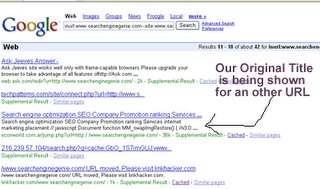

The new datacenter is doing well at handling sneaky 302 redirects, which used to confuse Googlebot a lot. There have been numerous discussions of this 302 redirect issue. Google has been monitoring the problem closely and has finally come out with a good fix in the new Big Daddy update DCs.

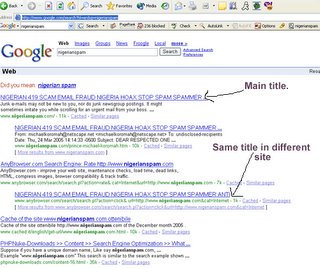

For example, check here:

https://www.google.com/search?hl=en&q=nigerianspam

There you can see an anybrowser.com page with the same title as the nigerianspam homepage.

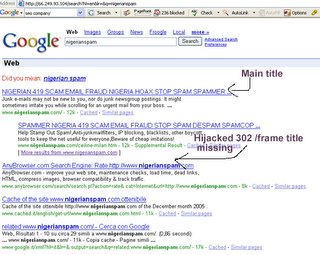

http://66.249.93.104/search?hl=en&lr=&q=nigerianspam&btnG=Search

Here the anybrowser.com page with the same title as the nigerianspam homepage has been removed.

PageRank of canonical problem URLs not fixed:

Google has not fixed the PageRank of sites with canonicalization problems. We hope it will be fixed in the coming days.

Some jump scripts that use 302 redirects are not fixed:

We hope this will improve soon. Example:

http://66.249.93.104/search?q=inurl:www.searchenginegenie.com+-site:www.searchenginegenie.com&hl=en&lr=&start=10&sa=N

Search relevancy not accurate in either the current results or the new DC:

When we searched for DVD (digital versatile disc) in Google, we found google.co.uk (Google's UK regional domain) ranking in the top 5. That is too bad, since Google has nothing to do with DVDs. We hope it will be fixed soon.

https://www.google.com/search?hl=en&lr=&q=dvd

http://66.249.93.104/search?hl=en&lr=&q=dvd

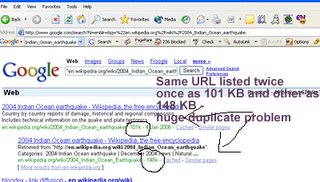

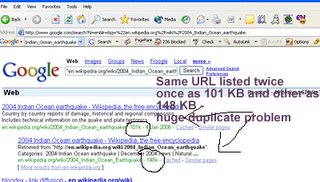

Possible duplication caused by Google's new, improved page indexing:

Google used to have a 101 KB indexing limit per page. It has now relaxed that limit and can crawl more than 500 KB. But it seems the index built under the 101 KB limit is still around and often shows up in search. We see it across our client sites. Here is an example on a Wikipedia page:

https://www.google.com/search?hl=en&lr=&q=%22en.wikipedia.org%2Fwiki%2F2004_Indian_Ocean_earthquake

Not fixed in the new datacenter either:

http://66.249.93.104/search?hl=en&lr=&q=%22en.wikipedia.org%2Fwiki%2F2004_Indian_Ocean_earthquake

That is all for this review; more will follow soon.

SEO Blog Team,


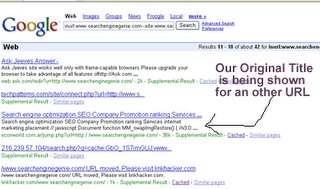


1 Comment:

An interesting post on Matt's blog. What do you say about this?

"Hi Matt,

I couldn't avoid noticing that the search interface powered by the Bigdaddy centre bears the name of the language (English) instead of the name of the country. Is this how Google in the States looks like? Or is it a global Google roll-out more linguistically targeted?

Talking about language, I would like to share a couple of things about the linguistic relevance of search results in Google outside English-speaking countries, something that has been a bit of a thorn on the side for a majority of ordinary users who do not speak or understand languages other than their own. Just to clarify what I mean by language and countries please bear in mind, that as a Brazilian, I am talking about Portuguese and Spanish in Latin-America.

In order to make it clearer for everyone to visualise the issues, I would like to share a few statistical data collected by appointment of the Brazilian Government last year on Internet usage and penetration in Brazil (http://www.nic.br/indicadores/usuarios/index.htm). I believe we can assume the situation in other Spanish-speaking countries in the South-American subcontinent are similar.

As expected, the number of users in Brazil is small, with approximately 12 million people connected to the Internet out of a population of 185 million. According to the Brazilian Internet census, just 20.81% of this total, or 2.4 million people, use the Internet to search for information and online services. The most interesting fact about these numbers is that the users connected to the Internet are mostly from classes A and B, which are the most proficient and resourceful in the use of the Internet.

The Language Issue

=================

I believe one of the reasons for such a small adherence to pivotal services such as search engines might be rooted on the fact that those services are still to offer the same ease of use in UI and quality in search results without much linguistic interference from other languages.

Our search settings are set by default to serve results from the web. This option yields results in any language.

Although, terms such as 'acarajé' (an Afro-Brazilian bean fritter) are easier to find in pure Portuguese, results get more confusing when one is researching for scientific terms; terms that are orthographically similar in several Latin languages such as 'politica'; or, international names of places and celebrities, just to mention a few.

Even when our users select "results in Portuguese" for a term such as 'florida' (full of flowers, in Portuguese and Spanish) in google.com.br, that does not guarantee they will get results in the language they had to make the extra effort to select: their own.

We feel that not only there is a lack of linguistic consistency in the results served but also what seems to be a not very well contextualised or culturally usable approach of search services inside the Portuguese and Spanish-speaking world at least.

The Florida example

===================

A search for 'florida' in Google.com.br with 'results in Portuguese' enabled made on 05 January 2006 yielded the following in the first page:

- third result, vernacular Latin;

- fourth result, vernacular Latin with a few English words at the bottom of a document from the Netherlands.

Just a couple of weeks before, the same search with the same settings brought a couple of English results just after the ones in Latin.

If you make the same search you will notice that there are a few other results with descriptions in English. In fact, those pages are in Portuguese and despite the bad linguistic accessibility they were not taken into account as foreign results in a Portuguese-only search in my personal research.

As known, from a SEO point of view, description meta-tags have little or no influence on the major SE's. However, they are the first point of information for users whenever search engines such as Google decide not to display excerpts of the text surrounding the keywords requested.

From a human point of view, despite the fact most of the blame goes to the web content producer who wrote the meta-description and the content itself, search engines could do better in not letting a whole meta-description in a language other than the one selected by the user slip or be displayed in the SERP's. As you know and might have said before, the description might not be important for the SE robot but it is for the user.

The language changes, the principle stays

====================================

Living in the UK and being able to communicate fairly well in English, I find it admirable to live immersed in a culture where all the information is readily available in the local language: from the technical books that help you as a professional, to the gadgets and their manuals to even salsa classes: everything is linguistically ready for consumption. You guys got it right: serve it in simple and clear English and you make it psychologically accessible for the consumer's desire.

My suggestion is that Google's default language results in country pages should be in that country's language(s). It is not only usable and accessible (both socially, culturally and interface-wise) but in a context such as the Brazilian, would give a hand to local developers trying to show the other 80% non-searching users in Brazil that searching for information in a 'national' SE brings the results they are expecting without any hiccups and hindrances. Most of all, it would help to uphold the Internet as a democratic resource that caters for all equally.

In a sense, the way it is now is like watching a subtitled film, either you concentrate on the dialogue or watch the action. Even those commanding the art of reading and watching a film at the same time do not deny it is much better to watch motion pictures in their own language.

The "Pages from Brazil option" issue

===============================

Unlike the UK and other countries that liberated the purchase of national domains to anyone, since the beginning the Brazilian government decided to reserve the .com.br and .net.br domains strictly to companies with a registered office in Brazil. Contrary to the disputable gain in creating a market free of speculation, this has created a trend amongst Brazilian web developers and designers to open their websites with .com or .net domains instead.

On top of that, a considerable number of the most affordable hosting companies in Brazil are actually merchant customers of hosting companies in the US. The same happens in other countries as well, even in the UK where thousands of .co.uk are believed to be hosted in much more affordable American hosting companies.

I believe the way Search Engines, including Google, have been positioning content as pertaining to a certain country according to the domain and where it is hosted is missing a trend that is going completely to the other direction.

Yes, it is not Google's fault that the academics at Fapesp (the institution that used to take care of the .br domains) have dictated that, if one is not an institution or a liberal professional registered in an official professional association, all that is left is the dubious .nom.br domain.

SEs could improve their localised SERPs tenfold if they considered that certain national domains are hard to acquire and that a significant amount of sites in the western world are hosted in the US either in paid host companies or in free host services such as blogger and geocities.

Possible workarounds for the 'pages from…' problem:

—————————————————————–

a. Extend the algorithm to check the content of the page (keywords, addresses, language, etc…) and interpret what country that page belongs to. It can be a bit complicated for multi-national sites hosting pages in several languages side by side, but if big multinational sites can, then the solution might already be in place somewhere just waiting to be rolled out across the globe.

b. Language differentiator chart (stemming). When I was a kid, my father, a Spanish immigrant, used to write down how to say certain words in several languages spoken in the Iberian Peninsula (Portugal and Spain). He would start with a word like 'action' then by using stemming he would progress from ação to acción then, acció, açon, etc…

Every Latin language has a set of characteristics that differentiate them from each other. I believe Google is already doing something in that sense as we can not see as many Spanish results in Portuguese-only searches as it used to be before.

c. Inbound and outbound links from a certain country. It could give a clue to bots of which country the .com or .net site belongs to.

d. Country opt-in via Google Sitemaps or other XML resources. Extending Google Sitemaps functionality to accommodate XML language/country declarations would be great and also help the algorithm to judge where the page is from.

e. XML namespaces in the HTML tag of the document.

f. Target country meta-tag. HP uses it already:

g. Country hint in the URL. In the same way SE algorithms read keywords in the URL, it could be helpful to extend the algorithm capability to analyse a .net or .com as pertaining to a country via the countries XML abbreviation in the URL. Example:

http:// www .foreignsite. net/br/index.html

h. DMOZ indication. Use of DMOZ's country categorisation as a validator of a site's origin.

None of the workarounds suggested above would in fact work alone (after all, we don't live in an ideal world where everyone says the truth in their meta-tags) but the group of them used together in different priorities and weights against indexed pages could help to decipher whether a .com or a .net is an American site, a site from any other country in Latin-America or a mail order company based in Jersey trying to expand its business to other countries by faking its origin.

In any case, what I would like to ask Google and other Search Engines is that a new way of looking into the linguistic challenges mentioned above be studied with sincerity and that you help us develop our small market by offering search results and search interfaces with language options that, by default, are relevant to our audiences in both sides of the digital divide.

We'll be looking forward to 'Bigdaddy' but we'll be more joyous when 'El Gran Papá' and 'O Paizão' come home.

Many thanks for your attention and looking forward to hearing more about what is shared above from you and your company.

"
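The commenter's closing point is that none of the signals (a) to (h) works alone, but together, with different weights, they could indicate a site's country. A minimal Python sketch of that idea follows. The signal names, the weights and the 0.5 threshold are all made up for illustration; nothing here reflects how Google or any other search engine actually scores pages.

```python
# Hypothetical weights for some of the signals listed in the comment.
# The values are invented for this sketch and sum to 1.0.
WEIGHTS = {
    "content_language": 0.30,  # (a)/(b) language and content of the page
    "inbound_links": 0.20,     # (c) links from sites in a given country
    "sitemap_opt_in": 0.15,    # (d) country declared via a sitemap
    "meta_tag": 0.10,          # (f) target-country meta tag
    "url_hint": 0.10,          # (g) country code in the URL path
    "dmoz_category": 0.15,     # (h) DMOZ regional category
}


def guess_country(signals, threshold=0.5):
    """Pick the country backed by the highest total weight.

    `signals` maps a signal name to the country code that signal votes for.
    Returns None when no country reaches the threshold, so that a single
    easily faked signal (such as a meta tag) cannot decide on its own.
    """
    scores = {}
    for name, country in signals.items():
        scores[country] = scores.get(country, 0.0) + WEIGHTS.get(name, 0.0)
    if not scores:
        return None
    best = max(scores, key=scores.get)
    return best if scores[best] >= threshold else None
```

For a .com site written in Portuguese, linked from Brazilian sites and using /br/ in its URLs, the sketch returns "br" even if its meta tag claims another country; a site with nothing but a meta tag gets no country at all.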
