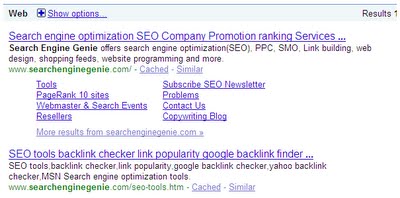

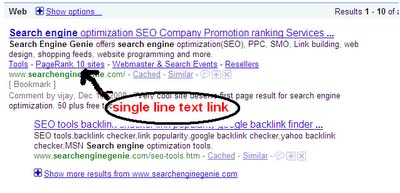

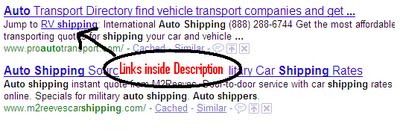

New Sitelink-type links with meta descriptions on some searches

Google has recently made a lot of changes to its sitelinks. When sitelinks (links that appear below a site's listing when someone searches for the domain name or another major name the domain represents) first came into existence, people thought they were a weird thing. Now Yahoo and Bing both use them, copied from Google. They are an interesting way for people to find pages on a site, because several pages can be clicked straight from the search results. I have personally used sitelinks for a long time: about one time out of two I have clicked a sitelink rather than the primary result. So yes, as an active internet user I use sitelinks, which I feel is a big achievement for Google. The experiment has worked for them. We usually see tracking URLs in sitelinks, so Google is clearly monitoring clicks on them too.

Today Google has improved a lot when it comes to sitelinks. They have come up with a sitelink format similar to the simple single-line text links we see these days. Take a look at the following example.

Great going, Google, keep it up.

Labels: link building, search engines

Age of domains - Importance in search engines:

I also feel that "stability" in the hosting network is another factor. Anyone who has a serious Internet business is going to have their own IPs and possibly their own server(s). They won't be relying on a cheap web host to compete in a market that is reserved for the BIG BOYS. That's like showing up for a drag race in a Prius or something. :)

It is not the "only" factor, though. Success takes time. There was a point in Internet history when it "could" happen overnight, but those odds have slowly dwindled to lottery-type statistics. You'll have to invest time and patience, knowing that what you are building is a long-term proposition.

Sure, you can open your doors and have success overnight and it still happens. If you have the right product and/or service along with a few strategically placed marketing efforts, you can start the ball rolling. Nature will take its course from there. Yes, things will "naturally" happen as it goes viral. Unfortunately many of us may not reach that level and we'll continue to work in the trenches, feed our families, and make a decent living off the leftovers. :)

Yes, domain age is an important factor in "all" things Internet related. Each year that passes, the value of that domain increases. It's like fine wine. "Since 1995" carries real meaning when talking about the Internet these days. Companies that have been online for any length of time would be wise to start advertising their "since" dates.

Labels: search engines

How to submit a re-inclusion request - Google's official video, transcribed

Requesting reconsideration in Google: how to remove a banned site - video transcript

Posted by Mariya Moeva, Search Quality Team

Hi, I'm Mariya Moeva from the Google Search Quality Team, and I'd like to talk to you about reconsideration requests. In this video we will go over how to submit a reconsideration request for your site. Let's take a webmaster as an example. Ricky is a hard-working webmaster who works on his ancient politics blog every day; let's call it example.com. One day he checks and sees that his site no longer appears in Google search results. Let's look at some things he should check to decide whether he needs to submit a reconsideration request. First, he needs to check whether his site's disappearance from the index may be caused by access issues. You can do that too. Log into your Webmaster Tools account, and on the Overview page you will see when Googlebot last successfully accessed your site. Here you can also check whether there are any crawl errors. For example, if your server was busy or unavailable when we tried to access your site, you would get a "URL unreachable" message. Alternatively, there may be URLs on your site blocked by your robots.txt file; you can see these under "URLs restricted by robots.txt".

If these URLs are not what you expected, go to Tools and select Analyze robots.txt. Here you can see whether your robots.txt file is properly formatted and only blocks the parts of your site that you don't want Google to crawl. If Google has no problems accessing your site, check to see if there is a message waiting for you in the Message Center of your Webmaster Tools account. This is the place Google uses to communicate with you and give you information about the sites you manage in Webmaster Tools. If we see that something is wrong with your site, we may send you a message there. It will detail the things you need to fix to bring your site back into compliance with Google's Webmaster Guidelines. Ricky logs into his Webmaster Tools account and checks for new messages, but he doesn't find any. If you don't find any message in the Message Center, check whether your site is or has been in violation of Google's Webmaster Guidelines. You can find them in the Help Center under the topic "Creating a Google-friendly site: how to make my site perform best in Google". If you are not sure why Google is not including your site, a great place to look for help is our Google Webmaster Help Group. There you will find many friendly and knowledgeable webmasters and Googlers who will be happy to look at your site and suggest what you might need to fix.
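Outside Webmaster Tools, you can run a rough local check of which URLs a robots.txt file blocks with Python's standard `urllib.robotparser`. This is a minimal sketch and not the Webmaster Tools analyzer. The robots.txt content and the example.com URLs below are hypothetical. Python's parser also implements the basic robots.txt rules, which may differ in edge cases from how Googlebot reads the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for Ricky's example.com blog.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /drafts/
"""


def blocked_urls(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the URLs that robots_txt disallows for the given user agent."""
    parser = RobotFileParser()
    # parse() takes the file as a list of lines, so no network access is needed.
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    candidates = [
        "https://example.com/",
        "https://example.com/ancient-rome/senate.html",
        "https://example.com/drafts/next-post.html",
    ]
    for url in blocked_urls(ROBOTS_TXT, candidates):
        print("Blocked for Googlebot:", url)
```

If a page you expected to rank shows up as blocked, that matches the "URLs restricted by robots.txt" case described in the video.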

You can find links to both the Help Center and the Google Webmaster Help Group at Google.com/webmasters. To get to the bottom of why his site has disappeared from the index, Ricky opens the Webmaster Guidelines and starts reading. In the quality guidelines we specifically mention avoiding hidden text or hidden links on the page. Ricky remembers that at one point he hired a friend named Liz, who claimed to know something about web design and to be able to make the site rank better in Google. He then scans his whole site and finds blocks of hidden text in the footer of all his pages. If your site violates Google's Webmaster Guidelines and you think this might have affected how your site ranks in Google, now would be a good time to submit a reconsideration request. But before you do that, change your site so that it falls within Google's Webmaster Guidelines.

Ricky removes all the hidden text from his pages, and now he can go ahead and submit a request for reconsideration. Log in to your Webmaster Tools account, click Request reconsideration under Tools, and follow the steps. Make sure you explain what was wrong with your site and what steps you have taken to fix it.

Once you have submitted a request, you will receive a message from us in the Message Center confirming that we have received it. We will then review your site for compliance with the Google Webmaster Guidelines. So that's an overview of how to submit a reinclusion or reconsideration request. Thanks for watching, and good luck with webmastering and ranking.

Labels: Google, search engines

BOSS (Build your Own Search Service): a step ahead from Yahoo!

BOSS is a web services platform that allows developers and companies to create and launch web-scale search products using the same infrastructure and technology that powers Yahoo! Search. It offers programmatic access to the entire Yahoo! Search index via an API. Developers can take advantage of Yahoo!'s production search infrastructure and technology, combine it with their own unique assets, and create their own search experiences.

SUMMARY:

* Ability to re-rank and blend results -- BOSS partners can re-rank search results as they see fit and blend Yahoo!'s results with proprietary and other web content in a single search experience.

* Total flexibility on presentation -- Freedom to present search results using any user interface paradigm, without Yahoo! branding or attribution requirements.

* BOSS Mashup Framework -- We're releasing a Python library and UI templates that allow developers to easily mashup BOSS search results with other public data sources

* Web, news and image search -- At launch, developers will have access to web, news and image search and we'll be adding more verticals soon.

* Unlimited queries -- There are no rate limits on the number of queries per day.
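Reciprocal rank fusion is a common, generic way to merge ranked lists, and it can illustrate the "re-rank and blend" point above. This is a sketch under assumptions. BOSS did not prescribe any blending method, and no BOSS API call is shown. The two result lists are hypothetical URL lists that stand in for Yahoo! results and a partner's own content.

```python
from collections import defaultdict


def reciprocal_rank_fusion(result_lists: list[list[str]], k: int = 60) -> list[str]:
    """Blend several ranked URL lists into one ranking.

    Each URL scores 1 / (k + rank) for every list it appears in; the
    constant k dampens the advantage of the very top positions.
    """
    scores: dict[str, float] = defaultdict(float)
    for results in result_lists:
        for rank, url in enumerate(results, start=1):
            scores[url] += 1.0 / (k + rank)
    # Highest score first; ties broken alphabetically for a stable order.
    return sorted(scores, key=lambda url: (-scores[url], url))


# Hypothetical inputs: web results from the search provider plus in-house pages.
yahoo_results = ["a.example/widgets", "b.example/widgets", "c.example/blue"]
own_results = ["c.example/blue", "d.example/reviews"]
print(reciprocal_rank_fusion([yahoo_results, own_results]))
```

A URL that appears in both lists ("c.example/blue") rises to the top, even though it was only third in the provider's ranking.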

USE FOR ITS PARTNERS & YAHOO: Yahoo! is opening up its infrastructure and technology to developers, entrepreneurs and companies because it believes that being open is core to Yahoo!'s future success. It has opened its network, opened its own search experience via SearchMonkey, and is now opening its search infrastructure via BOSS. This will lead to innovation both on Yahoo! and powered by Yahoo!. For BOSS, there's a virtuous circle: partners deliver innovative search experiences, and as their audiences and usage grow, Yahoo! gets more data it can use to improve itself. Second, Yahoo! sees new revenue streams from BOSS. In the coming months, BOSS will launch a monetization platform that will enable Yahoo! to expand its ad network and enable BOSS partners to jointly participate in the compelling economics of search.

ADVANTAGE FOR ITS USERS: BOSS will enable a range of fundamentally different search experiences. These new search products will provide value to users along multiple dimensions, such as vertical specialization, new relevance indicators and ranking models, and innovative UI implementations.

A FEW EXAMPLES OF WHAT'S POSSIBLE WITH BOSS:

ME.DIUM - Medium is a start-up that has built an innovative collaborative browsing product. It used BOSS to build a web-scale search engine that leverages its real-time surfing data. By combining the depth of the Yahoo! Search index with its insight into where users are browsing, Medium can provide its users with a unique buzz-based search experience.

HAKIA - Hakia is a semantic search start-up that is using BOSS to access the Yahoo! Search index and dramatically increase the speed with which it can semantically analyze the web. With BOSS providing this important infrastructure, Hakia is able to deliver a language search experience that isn't available from any of the "big three" search providers or other semantic search engines.

DAYLIFE TO-GO - A new self-service, hosted publishing platform from Daylife. Anyone can use this platform to generate customizable pages and widgets, and it uses the BOSS API platform to power its web search module.

CLUUZ - A next-generation search engine prototype that generates easier-to-understand search results through semantic cluster graphs, image extraction and tag clouds. The Cluuz analysis is performed in real time on results returned from the BOSS API.

To learn more about BOSS and get started using the API, visit the Yahoo! Developer Network. BOSS is open to all: you can check out the documentation, get a BOSS app ID and start building the next generation of search!

Labels: search engines, Yahoo

Google uses Search Logs effectively to combat Webspam

Matt Cutts, Senior Software Engineer and lead of the Web Spam team at Google, recently made an interesting post on how Google fights spam using data collection.

Web spam is the most annoying part of the Internet today. More than 85% of people use search engines to land on a site for the first time, so search engine spam should be kept out of the user's search experience entirely. Search engines have faced the daunting task of fighting web spam from the day they came into existence. Google is one of the search engines that has used effective anti-spam methods, and this is one reason it keeps its position on top of all search engines.

This is the first time I have seen Google openly acknowledge that it uses log data in its algorithm to combat spam. According to the Official Google Blog:

"Data from search logs is one tool we use to fight web spam and return cleaner and more relevant results. Logs data such as IP address and cookie information make it possible to create and use metrics that measure the different aspects of our search quality (such as index size and coverage, results "freshness," and spam). Whenever we create a new metric, it's essential to be able to go over our logs data and compute new spam metrics using previous queries or results. We use our search logs to go "back in time" and see how well Google did on queries from months before. When we create a metric that measures a new type of spam more accurately, we not only start tracking our spam success going forward, but we also use logs data to see how we were doing on that type of spam in previous months and years.

The IP and cookie information is important for helping us apply this method only to searches that are from legitimate users as opposed to those that were generated by bots and other false searches. For example, if a bot sends the same queries to Google over and over again, those queries should really be discarded before we measure how much spam our users see. All of this--log data, IP addresses, and cookie information--makes your search results cleaner and more relevant."
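The quote's bot example, where the same queries are sent over and over again, can be sketched as a simple pre-filter on log entries. This is only an illustration under stated assumptions. The LogEntry fields, the sample data and the max_repeats threshold are hypothetical, and Google has not published how it actually identifies bot traffic.

```python
from collections import Counter
from typing import NamedTuple


class LogEntry(NamedTuple):
    """One hypothetical search-log record."""
    ip: str
    cookie: str
    query: str


def drop_repeated_queries(entries: list[LogEntry], max_repeats: int = 3) -> list[LogEntry]:
    """Discard every entry whose (ip, cookie, query) triple occurs more than max_repeats times."""
    counts = Counter((e.ip, e.cookie, e.query) for e in entries)
    return [e for e in entries if counts[(e.ip, e.cookie, e.query)] <= max_repeats]


# Hypothetical log: one client repeats a query 5 times, two users search once each.
log = [LogEntry("10.0.0.9", "bot", "cheap widgets")] * 5 + [
    LogEntry("192.0.2.1", "u1", "cheap widgets"),
    LogEntry("192.0.2.2", "u2", "mesothelioma"),
]
clean = drop_repeated_queries(log)
print(len(clean))  # 2
```

Only the two one-off searches survive, so any spam metric computed afterwards reflects what real users searched for.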

According to Matt Cutts, IP addresses and search logs do play a role in judging the quality of results delivered to users. I personally feel this is a good approach. I know some IPs spam the search engines far more than regular IPs. If Google monitors IPs well, it can effectively block automated queries, and that information can feed into the search algorithm. Some keywords will always be spammed more than others, and Google can use this kind of tracking to impose more filters on those phrases. With more than 5 years of experience with search engines, I can see that Google imposes stronger filters on certain phrases than on others. For keywords like cancer and mesothelioma, more .gov and .org authority sites rank. For keywords like auto transport and real estate, commercial sites do a better job. I really enjoy the way the results are displayed, since I don't like to see a commercial site when I search for medical information. Most of the time commercial sites provide much less value to users than non-commercial sites. Some areas need more commercial sites, and others need information sites to be dominant. The only way to get this right is to go through historical data and search logs. Google can see which keywords are searched more, from where, what users did after clicking a result, and so on. User tracking can be done effectively with strong filters and effective methods.

Search engines face problems every day. Apart from web spam and search engine spam, they see DDoS attacks, excessive bot activity, scrapers and more. To stay on top, they need to keep working on stronger methods to combat spam.

Labels: Google, search engines, Spam

Martin Buster: a good post on Brett's link theme pyramid.

"1. Anchor text should match the page it's linking to. If the anchor says red widgets, particularly for a page meant to convert for red widgets, it should have the phrase red widgets on the page.

I know some people will say this opens you up to OOP, but I think as long as there are variations in the links, you're good to go. Because of the natural non-solicited links I've received on some sites, I've become a believer in the ability of the linking site's relevance to a query to transfer over to the linked-to page.

Why would one consider a page about red and blue widgets to be relevant for blue widgets? Looking at it from the point of view of relevance to the query, does it make sense to return a page about red and blue when the user is looking for blue? Looking at it from the point of conversions, if someone is querying for blue doesn't it make sense to return a page dedicated to blue?

PPC advertisers understand the value of having a landing page that matches the query. PPC advertisers understand the value of an optimized ad for inspiring targeted and converting click-through. Organic SEO should follow suit. A dedicated organic page can utilize a specific title and meta description for the same purpose. This means building specific links to specific pages.

I don't think it's adequate for the search user to query babysitting for boys and get a page for babysitting in general. So why build links to a general page when a specific page will not only be more relevant but convert better?

2. Hubs Getting back to Brett's theme pyramid, imo general anchors should point to general pages. Specific anchors should point to the specific pages. I don't understand why people are trying to obtain specific anchors to general pages.

Why are hub pages being created that are simply a big page-o-links to specific pages? Hubs are great starting points, imo they should be more than a page of links. I think this is especially critical for e-commerce where high level topics include brands or kinds of products and sub-pages include models or specific manufacturers.

These second level pages can be cultivated to perform for more general terms, but also in conjunction with, for example buy-cycle long tail phrases like reviews, comparison, versus, etc. Take that into account for the link building.

3. Is the home page really the most relevant page of the link? Here is another place where link building is wasted, imo. I think it makes sense to focus on relevance/links to supporting pages that then create a groundswell of relevance back to the home page for the more general terms.

Reviewing affiliate conversions and AdSense earnings, it's been my experience that specific pages perform better than general home pages. If you're lucky or by design people will click through to the pages they are looking for. But shouldn't you be showing those pages to the user first? And don't you think the search engines want to show those specific pages too? I think this may explain some ranking drops some people are experiencing for home pages that used to rank for multiple terms.

4. Longtail Matching This is where on-page SEO comes into play. This refers to geographic and buy-cycle phrases. Building partial matches works, imo. Someone showed me a site that was a leader in specific searches, but those pages would perform better if they had the names of cities and provinces on the page. Ranking for Babysitting for Boys is fine, but Babysitting for Boys + (on page) Tampa is better."

Source: webmasterworld.com/link_development/3676520.htm
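Points 1 and 4 of the quoted post say the anchor text should appear on the target page and that longtail modifiers like a city name help. Both lend themselves to a quick on-page check. This is a minimal sketch. The helper names, the simple word-based normalization and the sample page text are assumptions for illustration, not something from the post or from any search engine.

```python
import re


def normalize(text: str) -> str:
    """Lower-case text and keep only word tokens, separated by single spaces."""
    return " ".join(re.findall(r"[a-z0-9]+", text.lower()))


def phrase_on_page(phrase: str, page_text: str) -> bool:
    """True if the phrase appears in the page as a run of whole words."""
    return f" {normalize(phrase)} " in f" {normalize(page_text)} "


def audit_link(anchor: str, page_text: str, modifiers: list[str]) -> dict:
    """Report whether the anchor is on the page and which longtail modifiers are missing."""
    return {
        "anchor_on_page": phrase_on_page(anchor, page_text),
        "missing_modifiers": [m for m in modifiers if not phrase_on_page(m, page_text)],
    }


page = "Babysitting for Boys in Tampa: trusted sitters for active boys."
print(audit_link("babysitting for boys", page, ["Tampa", "Orlando"]))
# {'anchor_on_page': True, 'missing_modifiers': ['Orlando']}
```

Following the post's red/blue example, `phrase_on_page("red widgets", "Red and blue widgets")` is False. A page about red and blue widgets is not an exact match for a "red widgets" anchor.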

Labels: Search Engine Optimization, search engines

Losing the Viacom lawsuit might cost Google $250 billion.

Google responded strongly in its counter-filing in court. Google's filing says: "By seeking to make carriers and hosting providers liable for Internet communications, Viacom's complaint threatens the way hundreds of millions of people legitimately exchange information, news, entertainment, and political and artistic expression." We strongly support Google's claim.

Doing further research on Viacom's claim, we were able to find some hard facts. What currently looks like a small lawsuit might burst into a major nuclear explosion if Google ever loses. Viacom claims YouTube hosts more than 150,000 copyrighted videos, and it is claiming a billion dollars for them. So if Google loses this lawsuit, I am sure other companies won't sit quiet.

Companies like CBS and NBC will jump in and claim their own damages, and Google will have to face lots of companies around the world in the same way. I am sure just one lost lawsuit over YouTube will cost Google 50 billion dollars if other companies claim their share.

Google is a search engine that was built on other websites' information in its search results. It keeps a cache of copyrighted pages crawled on the web, so if YouTube is wrong then Google Search is wrong too. With all the billions of sites out there, Google would go bankrupt. I estimate Google would need to pay 200 billion dollars. Google News also recently faced a major lawsuit from a Belgian newspaper group, which wants more than 75 million dollars because its news appeared in Google News.

It seems everyone wants a piece of the Google pie.

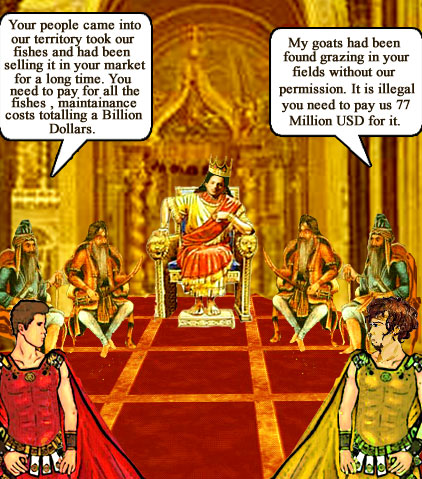

Look at the following set of images, where I have tried to portray the 2 lawsuits.

This image shows the Google Kingdom. Does that look like Sergey Brin on the King's chair? ;-)

These are Google country guys fishing in Viacom territory. Is Google responsible for this?

Google country guys selling fish taken from the river in Viacom territory.

This image criticises the Belgian newspaper group: goats from the Belgian newspaper group's territory are grazing in Google's territory.

Google's Market share / wealth

Viacom and Belgium Newspaper claim

Vijay

Labels: Search Engine Cartoons, search engines, Webmaster News

How Google handles Scrapers - Nice information from the Google team

We tackle 2 types of duplicate content problems: one within a site and the other with external sites. Duplicate content within a site is easy to fix, since we have full control over it. We can find every area that might create 2 pages with the same content. Then we can either keep one version from being crawled or remove any links to the pages that might be duplicates.

External sites are always a problem since we don't have any control over them. Google says it now effectively tracks down potential duplicates, gives maximum credit to the original source and filters out the rest of the duplicates.
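Word shingling with Jaccard similarity is a common, generic way to measure how much of one page's text reappears on another. That other page may be within your own site or a scraper's copy. This sketch is an illustration only. Google has not said it uses this method, and the shingle size k=4 and the sample texts are arbitrary assumptions.

```python
import re


def shingles(text: str, k: int = 4) -> set[tuple[str, ...]]:
    """Return the set of k-word sequences ("shingles") in the text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: str, b: str, k: int = 4) -> float:
    """Shared shingles divided by all distinct shingles (1.0 means the same wording)."""
    sa, sb = shingles(a, k), shingles(b, k)
    if not sa and not sb:
        # Neither text has k words, so there is nothing to compare.
        return 0.0
    return len(sa & sb) / len(sa | sb)


original = "Ricky writes about ancient politics every single day on his blog"
scraped = original + " copied by a scraper site today"
print(round(jaccard(original, scraped), 2))  # 0.57
```

A score close to 1.0 between two URLs on your own site points to a duplicate you can consolidate. A high score against an external page suggests a scraped copy.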

If you find a site that is ranking above you with your content, Google says:

- Check if your content is still accessible to our crawlers. You might unintentionally have blocked access to parts of your content in your robots.txt file.

- You can look in your Sitemap file to see if you made changes for the particular content which has been scraped.

- Check if your site is in line with our webmaster guidelines.
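For the Sitemap check in the list above, you can parse the XML with Python's standard library to see which lastmod date your Sitemap reports for the scraped page. This sketch assumes a standard sitemaps.org urlset file. The URLs and dates are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Optional

# Namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical Sitemap content.
SITEMAP_XML = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://example.com/articles/original-guide.html</loc>
    <lastmod>2008-05-01</lastmod>
  </url>
  <url>
    <loc>https://example.com/articles/other.html</loc>
  </url>
</urlset>
"""


def lastmod_for(sitemap_xml: str, page_url: str) -> Optional[str]:
    """Return the <lastmod> value listed for page_url, or None if it is absent."""
    root = ET.fromstring(sitemap_xml)
    for entry in root.findall("sm:url", NS):
        loc = (entry.findtext("sm:loc", default="", namespaces=NS) or "").strip()
        if loc == page_url:
            return entry.findtext("sm:lastmod", default=None, namespaces=NS)
    return None


print(lastmod_for(SITEMAP_XML, "https://example.com/articles/original-guide.html"))  # 2008-05-01
```

A missing or recently changed lastmod on the scraped page is the kind of detail that list item points you to look for.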

Labels: Google, search engines

Is Microsoft indirectly going for a Proxy fight?

On February 21, 2008, BLB&G, on behalf of Plaintiffs, filed a Class Action Complaint against Yahoo and its board of directors (the "Board"). The complaint alleges that they acted to thwart a non-coercive takeover bid by Microsoft, which would provide a 62% premium over Yahoo's pre-offer share price. It also alleges that they instead approved improper defensive measures and pursued third-party deals that would be destructive to shareholder value. Yahoo's "Just Say No to Microsoft" approach is a result of resentment by the Board, and not any good-faith focus on maximizing shareholder value. Microsoft attempted to initiate merger discussions in late 2006 and early 2007, but was rebuffed, supposedly so Yahoo's management could implement existing strategic plans. None of those initiatives improved Yahoo's performance. On February 1, 2008, over a year after its initial approach, Microsoft returned, offering to acquire Yahoo for $31 per share, a 62% premium above the $19.18 closing price of Yahoo's stock on January 31, 2008.

Looks like the proxy fight is about to move forward more aggressively. Let's wait and see.
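The 62% premium cited in the complaint follows directly from the two prices it gives: the $31 offer and the $19.18 close on January 31, 2008.

$$
\text{premium} = \frac{31 - 19.18}{19.18} = \frac{11.82}{19.18} \approx 0.616 \approx 62\%
$$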

Labels: Microsoft, search engines, Yahoo

Yahoo index update May 2008 - Yahoo search engine algorithm and ranking update

"We'll be rolling out some changes to our crawling, indexing and ranking algorithms over the next few days, but expect the update will be completed soon. As you know, throughout this process you may see some ranking changes and page shuffling in the index. "

Labels: search engines, Yahoo

Can links from foreign sites hurt your Google US rankings?

Google of course does geo-targeting, but it also has ways to relate links to sites regardless of where they come from. If your site is hosted in the US and has enough US backlinks, I wouldn't worry about links from other sites.

It's very normal to get backlinks from sites in other languages, especially if you run an information site. We get tons of backlinks from Japanese, Swedish, German, Spanish and many other language sites, and still our rankings never moved. So I wouldn't worry about it. Just keep improving your site and always look out for natural backlinks; they will be a hit.

Labels: Search Engine Optimization, search engines

Viacom's threat might kill internet freedom - Youtube lawsuit

"Viacom claimed YouTube consistently allowed unauthorised copies of popular television programming and movies to be posted on its website and viewed tens of thousands of times.

It said it had identified more than 150,000 such abuses which included clips from shows such as South Park, SpongeBob SquarePants and MTV Unplugged.

The company says the infringement also included the documentary An Inconvenient Truth which had been viewed "an astounding 1.5 billion times". "

According to the document:

threatens the way hundreds of millions of people legitimately exchange information

I agree with Google's claim above. The whole Internet is built on sharing information with each other. Just because someone illegally posted a copyrighted video on a popular video hosting site doesn't mean the video site is responsible for it. If it were, there wouldn't be any forums, blogs or message boards out there. Every site that runs a message board would have to verify each and every comment posted, since some of them might be copyrighted.

Dow Jones: "When we filed this lawsuit, we not only served our own interests, we served the interests of everyone who owns copyrights they want protected."

Well, I am not sure what the interest of other copyright owners is; I feel Dow Jones's comment should apply to Viacom only. Google's policy has always been "Don't be evil", and it grew up with that motto. Today it is way ahead of all other search engines because users are its No. 1 priority. Google's search engine indexes millions of copyrighted pages and stores them in its database. Still, no one complains, since Google does what is best for Internet users.

Video sharing websites are a great way to share legitimate information across the Internet. Just because someone posted some copyrighted videos, it's not fair to blame YouTube entirely.

Well, I can give a number of reasons why Viacom is completely wrong with their lawsuit.

1. If YouTube were supposed to verify all the millions of videos posted on its site, it could not run the site at all. Legitimate information is shared in many places, such as forums, blog comments and news sites. If every single piece of information had to be verified, there wouldn't be any good information sites on the web.

2. The word "copyright" itself will kill the way the Internet works. Today anything and everything is copyrighted, and it's impossible to share legitimate information unless it is verified. I feel copyright kills Internet freedom, and there should be new laws for Internet information sharing that protect the freedom to share.

3. After facing a lawsuit last year, Google added an automated copyright detection tool that stops some copyrighted videos from being uploaded. This is a very legitimate move and the best YouTube can do to prevent copyrighted videos. It also shows that Google and YouTube don't want copyrighted videos on their sites.

4. YouTube has a very clear copyright policy for the videos it hosts. Read the following:

Commercial Content Is Copyrighted

The most common reason we take down videos for copyright infringement is that they are direct copies of copyrighted content and the owners of the copyrighted content have alerted us that their content is being used without their permission. Once we become aware of an unauthorized use, we will remove the video promptly. That is the law.

Some examples of copyrighted content are:

- TV shows: including sitcoms, sports broadcasts, news broadcasts, comedy shows, cartoons, dramas, etc. Includes network and cable TV, pay-per-view and on-demand TV.
- Music videos, such as the ones you might find on music video channels.
- Videos of live concerts, even if you captured the video yourself. Even if you took the video yourself, the performer controls the right to use his/her image in a video, the songwriter owns the rights to the song being performed, and sometimes the venue prohibits filming without permission, so this video is likely to infringe somebody else's rights.
- Movies and movie trailers.
- Commercials.
- Slide shows that include photos or images owned by somebody else.

A Few Guiding Principles

- It doesn't matter how long or short the clip is, or exactly how it got to YouTube. If you taped it off cable, videotaped your TV screen, or downloaded it from some other website, it is still copyrighted, and requires the copyright owner's permission to distribute.
- It doesn't matter whether or not you give credit to the owner/author/songwriter: it is still copyrighted.
- It doesn't matter that you are not selling the video for money: it is still copyrighted.
- It doesn't matter whether or not the video contains a copyright notice: it is still copyrighted.
- It doesn't matter whether other similar videos appear on our site: it is still copyrighted.
- It doesn't matter if you created a video made of short clips of copyrighted content: even though you edited it together, the content is still copyrighted.

You can see they are very clear about copyright, and they even provide links for copyright complaints.

5. As of March there are over 3 billion videos hosted on YouTube. Viacom claims 150,000 copyrighted videos, which is only a fraction of all the videos posted on YouTube. How can you expect YouTube to sift through this rubble and find copyrighted videos when it's actually the user who is supposed to care about this?

6. Most of the time the videos posted on YouTube are of very poor quality, and they are also very short. I don't think small, low-quality videos posted on YouTube for viewing will kill a media business like Viacom altogether.

7. Some people suggest an effective verification system, such as asking for credit card details to post videos. Well, I know many people who are not willing to give their credit card information even for legitimate online purchases. How can you expect them to give their card details just to upload videos? This is a bit too much, and anything like it will stop legitimate users from enjoying the freedom of the Internet.
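Point 5's own figures are 150,000 claimed videos against more than 3 billion hosted. They put the claimed share at most at:

$$
\frac{150{,}000}{3 \times 10^{9}} = 5 \times 10^{-5} = 0.005\%
$$

Since the total is "over" 3 billion, the actual share would be smaller still.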

Viacom should withdraw its lawsuit to avoid humiliation in court. I feel nothing legitimate against YouTube or Google will prove Viacom's claim. Winning looks highly unlikely for Viacom. But if it ever does win, the way the whole Internet works will change and everyone will start suing each other, which would be a disaster for the Internet.

Let's all stand behind YouTube and Google and wish them success with their defence. YouTube should come out of this clean for the welfare of the Internet.

Search Engine Genie

Labels: Google, search engines

Search Engine / SEO events: visit us to keep up with happenings in the SEO industry

Check out the latest events here:

https://www.searchenginegenie.com/search-events/month.php

Labels: search engines, Search Events

Jill Whalen and the nitty-gritty of copywriting cartoon

Here is Jill Whalen, ready to teach copywriting in her class.

Labels: search engines, seo cartoons

SEOmoz, can I have an opt-out / unsubscribe link, please?

"You may have seen our launch of our new SEO Video Training Series on the blog last week. Dozens of people took advantage of our special introductory pricing and the feedback on the series has been fantastic. We want to make sure everybody who wants to learn sound SEO fundamentals can get their hands on this awesome training product, so we've got a special offer for you.

We promised we wouldn't offer the ridiculous Introductory Price of nearly 40% off again, but here's what we can do: make it more affordable than ever for you to get the SEO Video Training Series at the PRO Member price of $199 ($200 off of the Retail Price of $399)...

Until Saturday, May 3rd, you can get the complete SEO Video Training Series AND 1-Year of SEOmoz PRO Membership for only $499! Purchased Separately, these items would cost $798. Even with the PRO discount on the Video Series, you'd still pay $648 for both. Look at it either way you want, you're saving a minimum of $99!

To take advantage of this awesome, limited-time offer, simply go to the SEOmoz Store and add a 1-Year SEOmoz PRO Membership and The SEO Video Training Series to your shopping cart, then use the Coupon Code COMBO50 during checkout to receive your special price on this great package.

Cheers, The SEOmoz Team

PS-Remember, this offer is only available for Three Days, until Saturday, May 3rd!

PPS-If you're thinking of attending SMX Advanced in our hometown of Seattle, PRO Membership will get you 10% off (early bird rates still apply!) as well as an invite to our incredible party (check out what happened last year)!

This email was sent by: SEOmoz, 4314 Roosevelt Way NE, Seattle, WA, 98105, USA"

Update profile:

OK, I don't see an opt-out link in this email. When I click on "Update profile" I am taken to the login page, where I have to find the email settings and then untick a box to opt out. Why do I need to do this? I think SEOmoz should ask whether I opt in to commercial emails from them when I register.

I don't want to receive a couple of commercial emails and then have to hunt for a way to opt out.

Thanks for understanding

Search Engine Genie.

Labels: search engines, Spam

Digitalpoint Forums Down due to overactivity

"Database error

The database has encountered a problem.

Please try the following:

Load the page again by clicking the Refresh button in your web browser.

Open the forums.digitalpoint.com home page, then try to open another page.

The forums.digitalpoint.com forum technical staff have been notified of the error, though you may contact them if the problem persists.

We apologise for any inconvenience."

It's very rare to see such a popular forum go down. I think it is mostly due to overactivity: Google recently made a PageRank update, and most of the visitors to the Digital Point forums are addictively passionate about PageRank updates. I suspect this update put a huge load on their server and led to the database crash.

I think we should be hearing from them about this soon.

Search Engine Genie,

Labels: search engines, SEO Forum

Most recent informative SEO-related thread in Google Groups.

I recommend everyone read this thread to understand how a webmaster was able to overcome his penalty and get his rankings back in Google. Accept that you were wrong, and Google is always ready to forgive. We had a client who came to us with a penalized site. They had bought links from TLA, and Google detected those links and imposed some sort of penalty. We reviewed the site and recommended that the client remove all paid links and file a reinclusion request. You know what his answer was?

"Google is the loser since it has banned his site. Google is unlucky because it doesn't have his company's site in its index." Come on! Even without your site, Google has millions of sites to index, and it is not worried if one aggressive site is missing from its index, even if that site might be the world's best. It took us a lot of time to convince him to clean up the paid backlinks. He finally did, but he was still not ready to set his ego aside and submit a reinclusion request. We filed one on his behalf, and the site eventually came back to good top rankings. He was full of praise for us, but the truth is we didn't do anything special: all we did was explain in detail the mistakes that had previously been made on the site and promise that they would never be repeated. Google accepted our word and reincluded the site. Lesson learnt: if you want Google to send free traffic to your site, play by its guidelines; if you are going to spam it, face the consequences.

Read that thread; it makes the point better than I can.

Labels: Search Engine Optimization, search engines, Spam

SEO for Beginners: download the PowerPoint slideshow

Labels: Search Engine Optimization, search engines, SEO Tools

Matt Cutts Warns Against Buying Links

Regarding buying links, what we do is try to tackle things algorithmically, but we also try things that are scalable and robust. So when I refer to both the algorithm and users, both actually have to go well. So it's more along the lines of giving people the heads-up: yeah, we do consider buying links to be outside our guidelines, and I just sort of want to notify people that we may take strong action against sites in the future.

So if people want to do that, they always have the right. If you are the webmaster, it's your site and you can do whatever you want on your site; I totally support that idea. But we as a search engine think about and decide what we feel is best to return a high-quality index. So if people want to co-operate with Google and users, and try to do things in a way that is good for users, good for them and good for the search engines, that's fantastic. And we will try to return the best results we can.

Labels: Mattcutts Video Transcript, search engines

Share of traffic for the top 500 websites versus other sites on the Internet

In my opinion, no. Alexa users are mostly site owners, webmasters, techies, etc., so its traffic data is mostly webmaster-biased and never a reliable way to gauge the real traffic to a website. Though heavily biased, Alexa is still a good place to start. Some of the sites listed in the Alexa top 500 are the best on the Internet, so I wouldn't complain too much about Alexa.

If you do a Google search for top internet websites (https://www.google.com/search?hl=en&q=top+internet+websites), you will come across a bunch of good lists of the top sites on the Internet. But it's very difficult to understand and analyze what type of traffic a site really gets unless its owners care to share their log data, which I feel will never happen.

Search Engine Genie

Labels: search engines, website

Googlebot now digs deeper into your forms - Great new feature from Google smart guys

Remember, forms have always been a user-only feature: when we see a form, we type in a query and search for products, catalogs or other relevant information. For example, on many product sites we only see a search form; some product sites offer nothing but a form to reach their products. There is no other way to access the inner product pages, which might contain valuable information for the crawlers, so good product descriptions that are unique and useful stay hidden from search engine users. Similarly, imagine an .edu website: I personally know a lot of .edu websites that don't provide proper access to their huge inventory of research papers, PowerPoint presentations, etc.

The only way to access those papers is through a search box. On the Stanford website, for example, a search can turn up at least 6,000 useful articles about Google. If you browse through the Stanford website, you will not find this useful information linked anywhere; the pages are rendered directly from a database. Now, thanks to Googlebot's advanced capability to crawl forms, Google can submit queries like "Google research" to sites like Stanford's and crawl all the PDFs, PowerPoint files and other documents listed there. This is just amazing and a truly valuable addition.

I am personally enjoying this addition by Google. Many great websites I visit are nowhere near optimized: most of their high-quality product pages or research papers are hidden from regular crawlers. I have often thought of emailing them to ask for a search-engine-friendly sitemap or some pages with a hierarchical structure that reaches the inner pages. Most sites don't have this, nor do they care that they don't. At last Google has a way to crawl the great hidden web that is out there. When they roll out this option, I am sure it will be a huge hit and will add a few billion more pages to the useful Google index.

The Webmaster Central blog also reports that Googlebot can toggle radio buttons, drop-down menus, checkboxes, etc. Wow, that is so cool. I wish I had been part of the Google team that did this research; it is fascinating to make an automated crawler perform all this magic on your website, which has always been a user-only option.
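Google has not published how Googlebot picks values for form fields, so the following is only a toy sketch of the general idea: for a form that submits with GET, each combination of field values (a typed query, a drop-down option, a radio button) becomes an ordinary URL a crawler can fetch. The form URL, field names and values are hypothetical, and enumerating every combination is an assumption made for clarity; a real crawler would be far more selective.

```python
from itertools import product
from urllib.parse import urlencode


def form_query_urls(action, fields):
    """Expand every combination of a GET form's field values into a URL.

    `fields` maps a field name to the values a crawler might try for it
    (words for a text box, drop-down options, radio-button values, ...).
    """
    names = sorted(fields)  # fixed field order keeps the URLs stable
    urls = []
    for combo in product(*(fields[name] for name in names)):
        # urlencode percent-encodes values and turns spaces into '+'
        urls.append(f"{action}?{urlencode(list(zip(names, combo)))}")
    return urls


# Hypothetical research-paper search form: a text box plus a drop-down.
example = form_query_urls(
    "https://www.example.com/search.asp",
    {"q": ["google research"], "type": ["pdf", "ppt"]},
)
for url in example:
    print(url)
```

Each printed URL can then be crawled like any linked page, which is how database-backed content behind a search box could reach the index.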

A good thing I noticed is that they say they do this only for a select few quality sites; though there is some high-quality information out there, we can also find a lot of junk. I am sure the sites they crawl using this feature are mostly hand-picked or, if the selection is automated, subject to rigorous quality / authority scoring.

Another thing that is news to me is Googlebot's capability to scan JavaScript and Flash for inner links. I was aware that Googlebot can crawl Flash, but not sure how far they had got with JavaScript. A couple of years ago search engines stayed away from JavaScript to make sure they didn't get caught in some sort of loop that might end up crashing the server they were trying to crawl. Now it's great to hear they are scanning and crawling links in JavaScript and Flash without disturbing the well-being of the site in any way.

Beyond the positive side, there is a negative side too: some people don't want their pages hidden behind forms to be crawled by search engines. For that, of course, Google's crawling and indexing team has a solution. Googlebot obeys robots.txt, nofollow and noindex directives, so if you don't want those pages crawled you can block Googlebot from accessing your forms.

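A simple rule set like:

User-agent: Googlebot
Disallow: /search.asp
Disallow: /search.asp?

will keep Googlebot out of your search result pages if your search form submits to search.asp. (Because robots.txt rules match URL prefixes, the first Disallow line already covers every URL that starts with /search.asp, including ones with a query string; the second line is harmless but redundant.)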
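To check rules like these before uploading them, you can run them through a robots.txt parser. The sketch below uses Python's standard `urllib.robotparser` as a stand-in; Googlebot's own matcher supports more (for example wildcards), so treat this as an approximation. The domain and paths are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# The rules from the post. Keep the directives on consecutive lines:
# in the original robots.txt convention (and in this parser) a blank
# line ends a record.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /search.asp
Disallow: /search.asp?
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent, path in [
    ("Googlebot", "/search.asp?q=google+research"),  # form result page
    ("Googlebot", "/products/widget.html"),          # ordinary page
    ("Bingbot", "/search.asp?q=google+research"),    # rules only target Googlebot
]:
    url = "https://www.example.com" + path
    verdict = "allowed" if parser.can_fetch(agent, url) else "blocked"
    print(agent, path, "->", verdict)
```

Note that the record names only Googlebot, so other crawlers are still allowed into the search pages; use `User-agent: *` if you want to block everyone.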

Also, Googlebot only crawls forms that use the GET method, not the POST method. This is very good, since many POST forms ask users to enter sensitive information: email IDs, user names, passwords, etc. It's great that Googlebot is designed to stay away from sensitive areas like this. If it started crawling all these forms and there was a vulnerable form out there, hackers and password thieves would start using Google to find unprotected sites. Nice to know that Google is already aware of this and stays away from sensitive areas.
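The GET-only rule is easy to picture in code. This is a minimal sketch, not Googlebot's implementation: it uses Python's standard `html.parser` to list the forms on a page and keeps only those that submit with GET, so a login form using POST is never touched. The helper names and the sample page are hypothetical.

```python
from html.parser import HTMLParser


class FormMethodCollector(HTMLParser):
    """Records (action, method) for every <form> tag on a page."""

    def __init__(self):
        super().__init__()
        self.forms = []

    def handle_starttag(self, tag, attrs):
        if tag == "form":  # html.parser reports tag names in lowercase
            attributes = dict(attrs)
            # A form without a method attribute submits with GET by default.
            method = (attributes.get("method") or "get").lower()
            self.forms.append((attributes.get("action", ""), method))


def crawlable_forms(html):
    """Return the actions of forms a GET-only crawler could explore."""
    collector = FormMethodCollector()
    collector.feed(html)
    collector.close()
    return [action for action, method in collector.forms if method == "get"]


page = """
<form action="/search.asp" method="get"><input name="q"></form>
<form action="/login.asp" method="POST"><input name="password" type="password"></form>
<form action="/catalog.asp"><select name="category"></select></form>
"""
print(crawlable_forms(page))
```

The login form is skipped because POST is the method sites normally use for submissions that send data or change something, which is exactly the sensitive territory the post describes.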

I like this particular statement, where they say none of the currently indexed pages will be affected, so the current PageRank distribution is not disturbed:

"The web pages we discover in our enhanced crawl do not come at the expense of regular web pages that are already part of the crawl, so this change doesn't reduce PageRank for your other pages. As such it should only increase the exposure of your site in Google. This change also does not affect the crawling, ranking, or selection of other web pages in any significant way."

So what is next from Google? They are already reaching new heights with their search algorithms, indexing capabilities, etc. I am sure there won't be any stiff competition for Google for the next 25 years. I sincerely thank Jayant Madhavan and Alon Halevy of the Crawling and Indexing Team for this wonderful news.

What I expect next from Googlebot:

1. Currently I don't see them crawling large PDFs. In future I expect to see thorough crawling of the huge but useful PDFs out there, and I would expect them to provide a cache for those PDFs.

2. The capability to crawl ZIP or RAR files and find the information in them. I know some great sites that provide downloadable research papers in .zip format. Perhaps search engines could read what is inside a zipped file and, if it is useful for users, provide a snippet and make it available in the search index.

3. Special capabilities to crawl through complicated DHTML and Flash menus. I am sure search engines are nowhere near doing that yet. I have seen plenty of sites that use DHTML menus to link to their inner pages, and plenty that use Flash menus. I am sure Google will overcome these hurdles, understand the DHTML and crawl the quality pages on these sites.
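Wish-list item 2 is less far-fetched than it sounds, at least for ZIP. As a rough sketch (not how any search engine actually does it), Python's standard `zipfile` module can open an archive in memory, skip folders and non-text files, and pull a short snippet from each readable document. The file names and contents below are made up, and RAR archives would need a third-party library, so they are not covered.

```python
import io
import zipfile


def zip_snippets(data, max_chars=60):
    """Return (member name, text snippet) for each plain-text file in a ZIP archive."""
    snippets = []
    with zipfile.ZipFile(io.BytesIO(data)) as archive:
        for info in archive.infolist():
            # Skip folders and formats this sketch cannot read as plain text.
            if info.is_dir() or not info.filename.endswith((".txt", ".html")):
                continue
            text = archive.read(info).decode("utf-8", errors="replace")
            snippets.append((info.filename, text[:max_chars]))
    return snippets


# Build a small archive in memory so the example runs without a download.
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as archive:
    archive.writestr("papers/crawling.txt", "Crawling the hidden web through HTML forms.")
    archive.writestr("papers/figure.png", b"\x89PNG")

for name, snippet in zip_snippets(buffer.getvalue()):
    print(name, "->", snippet)
```

Papers stored as PDF or PowerPoint inside the archive would need a separate text extractor before they could be snippeted.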

Good luck to Google! From the Search Engine Genie Team

Labels: Google, search engines

Search Engine Friendly headers - Headers that help search engine rankings.

1. 200 OK: This is the primary status code that every working page should return. Search engines understand 200 OK as the ideal response for crawling and indexing a site.

2. 301 Permanent redirect (Moved Permanently): This is another search-engine-friendly response. A 301 redirect is used when you want to permanently move a page to another page or URL. It is search engine friendly because it won't confuse crawlers: they understand that your page has moved to a new location.

3. 503 status code (Service Unavailable): 503 is the best way to temporarily stop search engine crawlers from crawling your website. If your site is hacked or attacked by a virus, it is best to keep search engines from crawling it until it is fixed. Google's Webmaster Central blog has some useful information on that here.
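The three status codes above can be expressed as one small decision function. This is a framework-neutral sketch under stated assumptions: the redirect map, the example.com URLs and the one-hour `Retry-After` value are hypothetical, and the function only returns a status and headers, which you would hand to whatever web server or framework you use. `Retry-After` is a standard HTTP header that may accompany a 503 to say when to come back.

```python
from http import HTTPStatus

# Hypothetical map of permanently moved pages: old path -> new URL.
MOVED = {"/old-page.html": "https://www.example.com/new-page.html"}


def choose_response(path, under_maintenance=False):
    """Return (status, headers) following the three cases described above."""
    if under_maintenance:
        # 503: the outage is temporary; Retry-After suggests a wait in seconds.
        return HTTPStatus.SERVICE_UNAVAILABLE, {"Retry-After": "3600"}
    if path in MOVED:
        # 301: the page has moved permanently to the URL in Location.
        return HTTPStatus.MOVED_PERMANENTLY, {"Location": MOVED[path]}
    # 200: a normal, working page.
    return HTTPStatus.OK, {"Content-Type": "text/html; charset=utf-8"}


for path, down in [("/", False), ("/old-page.html", False), ("/", True)]:
    status, headers = choose_response(path, down)
    print(path, status.value, status.phrase, headers)
```

Checking maintenance first means the whole site, including redirected pages, answers 503 while it is being cleaned up, so crawlers back off rather than recording errors or following redirects.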

Search Engine Genie

Labels: Search Engine Optimization, search engines

Search engines love fresh contents - New contents a gift for search engines.

I recommend updating the site regularly: add a blog, article or news section and keep it updated with fresh information, so that whenever search engines come to your site they find something new. To get the best results from these updates, make sure what you write is unique; content syndicated from other blogs or news sites doesn't work as well. Unique information is a hit with search engines: they love to find great, fresh information on your website for their users. Our blog posts get crawled within about 2 hours. This is because of the quality, fresh information we provide for the crawlers.

SEO Blog Team.

Labels: Search Engine Optimization, search engines

Catching a bee in a forest full of bees - Unpredictable Search engines.

Some 6 years ago search engines had much less spam to fight. People were not aware of innovative ways to spam search engines; all they knew were FFA (free-for-all) links, keyword stuffing, hidden keywords / links, HTML keyword stuffing (abusing loopholes in HTML to stuff keywords), automated link exchanges, comment spamming, cloaking / content delivery, etc. Since search engine algorithms were not very sophisticated at that time, all these tactics worked. None of them come anywhere near working in search engines any more. All these loopholes were closed, but people still spam the search engines.

Let's look at some techniques from 3 years back that are against search engine guidelines.

- Blog spamming: Spamming blogs with comment spam. The most targeted sites were big university sites like Stanford.edu, which allowed people to post comments on their articles or news sections.

- Aggressive / automated link exchanges: Aggressive link exchanges still worked, though not to the level they did around 2002 or 2003. Automated link exchange became a huge industry, and people used very aggressive link exchanges to gain the upper hand in backlinks.

- Forum spamming: Spamming forums through signature links, links in posts, automated forum spam, etc. This was a very popular tactic, where people visited forums just to place their links in signatures or posts for search engine benefit.

- Cross-linking: A major form of search engine spam, where a spammer starts hundreds of sites and cross-links them to gain link benefit in search engines.

- DMOZ clones: A huge number of DMOZ clones started appearing and became popular, since they can generate hundreds of thousands of pages instantly for search engines.

- Directory spam: A huge number of directories started coming out with zero value to visitors, built just for search engine benefit.

- Links from lots of blogs: This came into existence in 2004 and still exists to a certain extent.

- Links and content hidden on the page: behind images, in noscript tags, in hidden content, etc.

- Spamming Wikipedia by inserting links (Wikipedia added nofollow to its external links largely to fight this).

- Text link ads: Buying your way to the top of the organic rankings. Bought text links passed search engine benefit because search engines depended on backlinks and anchor text to rank websites.

I could keep listing many more things people used to spam search engines. But today most of the above tactics don't work anymore; search engines like Google have closed their algorithms to these sorts of loopholes. I can tell you this with some background in search algorithms: just to close the tactics above, they would need to implement 30 different factors. It's not easy to detect spam without hurting millions of websites out there that might do something similar but are not considered spam.

To combat these problems, search engines roll out algorithmic changes very carefully, after a lot of testing, so that they don't affect the rankings of innocent webmasters who never did anything against search engine guidelines. At this point I can imagine at least 150 factors playing into rankings for competitive keywords in Google.

Let's see what the 2007/2008 search engine spam tactics are.

Social bookmarking, which has picked up so much these days, has been a target of search engine spamming for some time now. People are supposed to bookmark only interesting pages, but now everyone bookmarks everything, mostly for search engine benefit. Search engines love sites like Delicious and Digg and tend to crawl links from them more readily, so people target these sites.

Search engine spamming in the name of link baiting: link baiting, a very common term these days, has been subject to abuse. People who imagine they are being creative use some really aggressive methods on their sites to gain natural backlinks. Sometimes it works, sometimes it doesn't. Bad PR (public relations) is the main problem here: people write crap about others or about other sites so that they can gain the sympathy of the other party. This is definitely not a healthy way to gain backlinks.

Pay per post / pay for blogging: another tactic that has been subject to spamming. Now people are buying posts on blogs. They get good bloggers to write about their site and provide a backlink. Most of the time this is just like a paid text link advertisement.

Adding a page about your site, with anchor text backlinks, on an established site.

Reviews on established blogs are similar to pay per post: you pay a blogger to review your site and link back in return for money.

$1 articles: This is a very difficult type of spam to combat, where advertisers pay $1 for very low-quality articles and stuff their sites with junk information to show search engines that they have content.

This is just a small list of new ideas for spamming search engines, and I could go on to list more, so we cannot blame the search engines in any way for taking action like this.

One wonderful thing is the death of anchor text link advertising in 2007/2008. I am personally happy about it. It used to let the rich and famous dominate search results; that is no longer the case. Search engines don't see text link advertising as search engine friendly and are ready to impose strong penalties on sites that buy or sell links. Though search engines like Google prefer to fight spam algorithmically, I am sure some manual review, especially of paid or rented links, will bring more success and quality to the index.

Considering all these new tactics, I am sure search engine optimization is going to be one of the hardest industries to work in. That said, if there were no innovative ideas for getting sites ranked, search quality engineers would be jobless. So let's keep them working hard to fight search engine spam algorithmically.

Search Engine Genie.

Labels: search engines

Are you on Wikipedia's spam blacklist?

meta.wikimedia.org/wiki/Spam_blacklist

Wikipedia has a strong relationship with search engines. Wikipedia does share this information with search engines, and it can result in your site losing credibility with them.

Matt Cutts of Google denies any automated penalty:

"If you do a search for [wikipedia spam blacklist], the first result is helpful. It gives pointers to various strings and urls that Wikipedia has blacklisted on their site.

I'd characterize that list as much like a spam report: the data can be useful, but at least in Google it wouldn't automatically result in a penalty (for the reason that site A might be trying to hurt site B).

That could be one of the things jehochman was referring to."

Even though Matt denies an automatic penalty, he does say the list is a kind of spam report, so I recommend always staying off it.

Labels: search engines

Search Engines love webmasters and siteowners

But now it's a totally different world, I would say. The prompt responses people get from search quality engineers about their site problems seriously amaze me.

For Google we have Matt Cutts' blog, the Webmaster Central blog, an AdWords rep in the WebmasterWorld forum and John Mueller participating in forums. Matt Cutts goes around webmaster / SEO blogs and responds to specific problems or concerns (of course, his own blog is great), and we have Google Groups, where many employees like John Mueller, Jonathan Simon, Susan, Mariya, etc. hang out. Most of the Google experts are always around when a webmaster is serious about a problem.

Back in 2003 we had just one ghost-like representative from Google in WebmasterWorld, named GoogleGuy, who came around and asked for feedback. When there was an update to their algorithm or search index, he would occasionally respond, with a lot of ambiguity. His answers were treated like answers from the Google god itself, and his posts were followed closely; people came to WebmasterWorld just to search for his posts. To this day, 95% or more of WebmasterWorld's active members don't know who the real person behind the nickname GoogleGuy is. Many suspect GoogleGuy is Matt Cutts, but he has denied being the original GoogleGuy. Today things have changed: we have Google employees answering questions all over the place. They recently hosted a webmaster live chat session, the first of its kind, which was a success, attended by more than 250 people.

For Yahoo it used to be Tim Mayer, nicknamed Yahoo Tim, who came around to give a weather report (Yahoo's index update) or answer the occasional question, but now they have a wonderful place for webmasters to seek help.

This place gives much better support than Google does. I rarely see a question that is not reviewed by a Yahoo Site Explorer employee. That is a very positive sign and a huge step forward in bringing webmasters, white hat SEOs and site owners closer. It's great that Yahoo, a search engine that used to be very reluctant to help webmasters, now has a place where it provides instant solutions to site owners' problems.

For MSN it used to be MSN dude, who started from the day MSN separated from Yahoo to become an independent search engine. He came by for feedback but was never a regular. Now even big Microsoft has a forum for webmasters to discuss problems with their sites. When I visited this forum I saw people like Brett Hunt, a Live Search employee, actively answering site owners' questions. This is great.

So, seeing the world change so much, I can say one thing: search engines have finally understood the importance of good relationships and communication with webmasters and site owners in maintaining the quality of their index. Good communication and strong guidelines help webmasters go in the right direction when it comes to ranking their sites in search engines.

I would like to wholeheartedly thank all the top search engine engineers for taking this bold step of helping us webmasters.

Search Engine Genie

Labels: search engines

Acceptable downtime for Search Engines

But that being said, if your site is down for a long time, you have reason to worry. If search engines repeatedly hit a site and find it down, they may remove it from the index or stop it from ranking altogether. They do this to prevent users from visiting a site from their SERPs (Search Engine Results Pages) and returning empty-handed. So if your site is down, don't wait too long: make sure the host solves the problem promptly, or switch hosts and update your DNS immediately.

This will save a lot of headache with search engines.

Labels: search engines