New Bigdaddy datacenters report huge increase in search result pages

Compared to the previous Google index, the new, improved Googlebot that powers the Bigdaddy datacenters shows a huge increase in indexed data. Could this be because the new Googlebot (Google's crawler) is more effective at crawling sophisticated JavaScript, Flash, frames, etc.?

A search for *.* previously showed 9,660,000,000 results; it now shows 25,270,000,000 (that's more than 25 billion).

A search for "the" previously showed 8,660,000,000 results; it now shows 22,010,000,000.
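To put "huge increase" in numbers, here is a minimal sketch that divides the new result count by the old one for each query quoted above. Result counts shown by a search engine are rough estimates, so treat the ratios as an indication of scale only. The `counts` table and `growth_factor` helper are illustrative names, not anything from Google.

```python
# Result counts quoted above as (before, after) for each query.
counts = {
    "*.*": (9_660_000_000, 25_270_000_000),
    '"the"': (8_660_000_000, 22_010_000_000),
}


def growth_factor(before: int, after: int) -> float:
    """How many times larger the new result count is than the old one."""
    return after / before


for query, (before, after) in counts.items():
    # Two decimal places are plenty for numbers that are estimates to begin with.
    print(f"{query}: {growth_factor(before, after):.2f}x")
```

This prints 2.62x for `*.*` and 2.54x for `"the"`, so both reported counts grew by roughly two and a half times.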

Is the new Bigdaddy datacenter infrastructure a step ahead in Google's crawling technology?

Have Sitemaps killed my site? An interesting thread at WebmasterWorld

An interesting thread at webmasterworld.com discussed a site whose pages went URL-only after its owner signed up for Google Sitemaps. Did Google Sitemaps affect the indexing of his site's pages?

WebmasterWorld.com member mrmister says:

"I noticed that there are about 8 pages that are URL only. I've never seen this happen before. I did a standard Google search for my "green widgets" category page by searching for [green widgets]. It used to appear somewhere on page 1 but it was no longer there. I clicked to page 2 and I found my "history of green widgets" page sitting at the top of page 2. The "history of green widgets" page is the only subcategory page that is still listed as non-URL only. I checked my logs and Google has yet to crawl this page.

The site itself is a small site (about 80 pages). It's been going in various guises for about 10 years and therefore has a fair amount of inbound links (mainly to internal pages rather than the home page). For most categories it gets to page 1 of the SERPs for two-word keyphrases. I gave it a design overhaul about a year back to convert it from tag soup to clean valid HTML and CSS. I changed the linking structure and the URLs using 301s. I also improved the prominence of Adsense ads (that I'd been trialling for about a year previously). It weathered the change fine (some previous number 1 results dropped down a few places but nothing major).

There have been no major changes since then. I've added a few categories. It's all been fine, every page has been indexed in a timely fashion.

However a week ago, I signed up to Google Sitemaps. I am wondering if this is connected in any way with the URL-only pages that I'm starting to see. "

So what could have caused this? Submitting to Google Sitemaps might have been a coincidence.

Vanessa Fox of Google Engineering reports on effective ways of moving domain names

We have already given some good suggestions on moving domains in our blog.

Nothing is more effective than getting information from a Google employee. Matt Cutts, a senior Google engineer, has already discussed 301 redirects ( https://www.mattcutts.com/blog/the-little-301-that-could ).

Now Vanessa Fox writes on the Sitemaps blog about using 301 redirects effectively.

Here is an extract from the Google Sitemaps blog:

"Recently, someone asked me about moving from one domain to another. He had read that Google recommends using a 301 redirect to let Googlebot know about the move, but he wasn't sure if he should do that. He wondered if Googlebot would follow the 301 to the new site, see that it contained the same content as the pages already indexed from the old site, and think it was duplicate content (and therefore not index it). He wondered if a 302 redirect would be a better option. I told him that a 301 redirect was exactly what he should do. A 302 redirect tells Googlebot that the move is temporary and that Google should continue to index the old domain. A 301 redirect tells Googlebot that the move is permanent and that Google should start indexing the new domain instead. Googlebot won't see the new site as duplicate content, but as moved content. And that's exactly what someone who is changing domains wants. He also wondered how long it would take for the new site to show up in Google search results. He thought that a new site could take longer to index than new pages of an existing site. I told him that if he noticed that it took a while for a new site to be indexed, it was generally because it took Googlebot a while to learn about the new site. Googlebot learns about new pages to crawl by following links from other pages and from Sitemaps. If Googlebot already knows about a site, it generally finds out about new pages on that site quickly, since the site links to the new pages. I told him that by using a 301 to redirect Googlebot from the old domain to the new one and by submitting a Sitemap for the new domain, Googlebot could much more quickly learn about the new domain than it might otherwise. He could also let other sites that link to him know about the domain change so they could update their links."
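The advice above boils down to answering every old URL with a 301 that points to the same page on the new domain. Below is a minimal sketch of that behaviour using Python's standard-library `http.server`, assuming a hypothetical new domain `www.new-example.com`. On a real site this is normally configured in the web server itself rather than written by hand, so read it as an illustration of what the server should send, not as a production setup.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical destination domain; replace with the real new domain.
NEW_HOST = "www.new-example.com"


def redirect_target(path: str, new_host: str = NEW_HOST) -> str:
    """Map a request path (including any query string) to the same path on the new domain."""
    return f"https://{new_host}{path}"


class PermanentRedirectHandler(BaseHTTPRequestHandler):
    """Answers every request on the old domain with a 301 to the new domain."""

    def do_GET(self) -> None:
        # 301 = moved permanently, so crawlers should start indexing the new URL.
        # A 302 here would signal a temporary move and keep the old URL indexed.
        self.send_response(301)
        self.send_header("Location", redirect_target(self.path))
        self.send_header("Content-Length", "0")
        self.end_headers()

    # HEAD requests get the same status line and headers.
    do_HEAD = do_GET


def serve(port: int = 8080) -> None:
    """Run the redirecting server on the given port (hypothetical helper for local testing)."""
    HTTPServer(("", port), PermanentRedirectHandler).serve_forever()
```

Calling `serve()` and requesting `http://localhost:8080/old/page.html` should return a `301` with `Location: https://www.new-example.com/old/page.html`. Because the path and query string are kept, each old page points to its own counterpart rather than everything collapsing onto the new home page.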

Do redirects pass PageRank?

Many people ask whether redirects pass Google PageRank.

Answer:

It depends on the type of redirect. A normal 302 redirect rarely passes PageRank; a 301 redirect will pass PageRank.

How the redirect is implemented also matters. If redirects are hidden inside JavaScript, it is impossible for the link to pass PageRank; similarly, links from folders blocked by the robots.txt file won't pass PageRank.
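Two of the factors in this answer can be checked mechanically: which HTTP status code a redirect returns, and whether robots.txt lets a crawler fetch the URL at all. Here is a minimal sketch of both checks, using Python's standard-library `urllib.robotparser` and a made-up robots.txt that blocks a hypothetical `/private/` folder. It only classifies redirects according to HTTP semantics and says nothing about how Google actually weighs them for PageRank. JavaScript redirects never show up here, because they are not HTTP status codes at all.

```python
from urllib.robotparser import RobotFileParser

# HTTP status codes grouped by what they tell a crawler about the move.
PERMANENT_REDIRECTS = {301, 308}
TEMPORARY_REDIRECTS = {302, 303, 307}


def classify_redirect(status: int) -> str:
    """Label an HTTP status code as a permanent redirect, a temporary redirect, or neither."""
    if status in PERMANENT_REDIRECTS:
        return "permanent"
    if status in TEMPORARY_REDIRECTS:
        return "temporary"
    return "not a redirect"


def crawlable(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Check whether robots.txt rules allow the given user agent to fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt that blocks one folder for every crawler.
robots = "User-agent: *\nDisallow: /private/\n"
print(classify_redirect(301))                                        # permanent
print(classify_redirect(302))                                        # temporary
print(crawlable(robots, "http://www.example.com/private/old.html"))  # False
print(crawlable(robots, "http://www.example.com/old.html"))          # True
```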

Google reaches new heights: today's market cap is 127 billion USD

Google has reached new heights. Its market capitalization is around 127 billion USD, which is huge.

Today's stats:

GOOGLE (NasdaqNM:GOOG) Delayed quote data

| Field | Value |
|---|---|
| Last Trade | 428.20 |
| Trade Time | 2:17 PM ET |
| Change | -5.29 (-1.22%) |
| Prev Close | 433.49 |
| Open | 429.39 |
| Bid | 428.10 x 200 |
| Ask | 428.20 x 200 |
| 1y Target Est | 476.31 |
| Day's Range | 425.00 - 433.28 |
| 52wk Range | 172.57 - 475.11 |
| Volume | 6,620,322 |
| Avg Vol (3m) | 11,177,700 |
| Market Cap | 126.55B |
| P/E (ttm) | 94.86 |
| EPS (ttm) | 4.51 |
| Div & Yield | N/A (N/A) |
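The change figure in the quote can be checked from the previous close and the last trade. Here is a minimal sketch that uses only numbers from the table. Because the last trade is below the previous close, the change comes out negative, meaning the price fell on the day.

```python
# Figures copied from the delayed quote above.
prev_close = 433.49
last_trade = 428.20

change = last_trade - prev_close        # negative value: the price fell
pct_change = change / prev_close * 100  # change relative to the previous close

print(f"Change: {change:.2f} ({pct_change:.2f}%)")  # Change: -5.29 (-1.22%)
```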

SEO blog team

Getting banned from AdSense because of a clickbot

A webmaster reports in a forum that his AdSense account was closed because of fraudulent clicks on his site.

He said:

"An ex-friend of mine recently created a bot. This bot visits a site, clicks on an ad, changes its IP and comes back to click again. I was wondering why my adsense revenue for that day shot up so dramatically. My google account has now been banned and revenue lost for several of my sites. Presumably there is no chance of getting my account reinstated? Additionally, whats to stop him running this bot on sites he wants to get banned? I am very very unhappy with this guy. "

This is really unfortunate. We recommend that everyone monitor their AdSense account actively; if you see fraudulent clicks, shut down your ads temporarily. Google is very strict on this issue.
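Part of that monitoring can be automated. Here is a minimal sketch, assuming you copy daily click counts from your own reports into a list (the numbers below are made up). It flags any day whose clicks exceed the previous week's average by more than three standard deviations. The function name, the 7-day window and the threshold of 3 are hypothetical choices for illustration. This is not how Google detects invalid clicks. It only gives an early warning that should prompt you to take a manual look.

```python
from statistics import mean, stdev


def flag_click_spikes(daily_clicks: list[int], window: int = 7, k: float = 3.0) -> list[int]:
    """Return the indices of days whose clicks jump well above the trailing window.

    A day is flagged when its count exceeds mean + k * stdev of the previous
    `window` days. Both `window` and `k` are illustrative defaults, not tuned values.
    """
    if window < 2:
        raise ValueError("window must be at least 2 to compute a standard deviation")
    flagged = []
    for day in range(window, len(daily_clicks)):
        history = daily_clicks[day - window:day]
        # Floor the spread at 1 click so a perfectly flat history still needs a real jump.
        spread = max(stdev(history), 1.0)
        if daily_clicks[day] > mean(history) + k * spread:
            flagged.append(day)
    return flagged


# Hypothetical daily click counts: a steady week, then a sudden burst on day 7.
clicks = [20, 22, 19, 21, 20, 23, 21, 250, 22]
print(flag_click_spikes(clicks))  # [7]
```

A flagged day is the point at which to consider pulling the ads temporarily, as recommended above, before the clicks pile up further.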

carcaserdotcom seocontest test page: just checking to see how this site ranks in the carcaserdotcom seocontest

Just checking to see how this site ranks for the carcaserdotcom seocontest organized by carcasher.com. We don't want to exchange links with sites taking part in the seocontest, so please don't contact us with link exchange offers for the carcaserdotcom seo contest.

Thousands of sites will soon jump into this carcaserdotcom contest. Good luck to all!

New Search Engine Optimization (SEO) Contest starting Feb 1st 2006

carcasher.com is organizing a new SEO contest where the keyword to be ranked is "carcasherdotcom seocontest".

carcasherdotcom seocontest is a weird keyword to choose. The rules of the contest:

SEO Promotion Rules:

No dirty SEO techniques! (Read the Google Webmaster Guidelines.) SEOs caught using any of the following: doorway pages, spam, hidden links, etc. WILL NOT qualify for the prize, and the prize will go to the next best SEO.

Every website HAS TO HAVE an email address published ON THAT SITE so that nobody else can claim your prizes.

We will pay prizes through PayPal, Money Order or CC, whichever applicable.

In order to qualify for the prize, a web page must include ONE of the following:

a) a link to the rules of the contest, http://www.carcasher.com/SEOContest/, OR

b) a link to the www.carcasher.com homepage, OR

c) the following text (no link): We support CarCasher.Com

Great prizes include

$14,000, a 42" Plasma TV, a Sony PSP, and an iPod in prizes, PLUS a 12-month, $12,000 SEO contract for 2007.

Up for the challenge? Go for it at www.carcasher.com

Picasa now available in 25 more languages

Picasa, Google's image editing software, is now available in 25 more languages.

The new interface languages for Picasa are Bulgarian, Croatian, Czech, Danish, Estonian, Finnish, Greek, Hungarian, Icelandic, Indonesian, Latvian, Lithuanian, Norwegian, Tagalog, Polish, Romanian, Serbian, Slovak, Slovenian, Catalan, Swedish, Thai, Turkish, Ukrainian, and Vietnamese.

Picasa is great photo organizing software; it is a must-download for anyone who wants to organize their photos effectively.

Download Picasa here: picasa.google.com

Can Google AdSense and Yahoo Publisher Network ads be published on the same page?

Everybody who runs contextual ads on their site will wonder whether AdSense and YPN can be published on the same site or page.

The AdSense advisor says AdSense and YPN can be published on the same site but not on the same page.

This is what he said at webmasterworld.com:

"YPN ads are considered competitive ads, as any other contextually targeted ads, or ads that mimic Google ads in appearance. For a more detailed description, see our policies page (http://google.com/adsense/) 'Competitive ads and services'.

While you can use both AdSense and YPN on the same site, our program policies prohibit publishers from using them on the same page. "

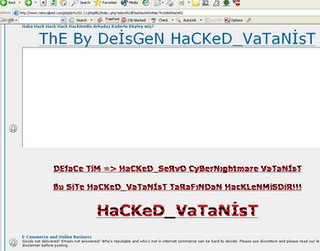

Website penalized due to spamming: a website penalty due to potential spam

A webmasterworld.com member reports that his site got penalized for using link spam tactics.

He says:

"My website got penalized by google. (Congratulations to me :-) ) Things started from this JAN (when Google scheduled to upgrade its PR algo? I am not sure if its true.) Traffic dropped. So did the earnings. Many people felt changes in their rankings as well as earnings in this particular month. On the other hand, others felt more income and traffic (obviously)

Reason of Penalty: I was using my own automatic Link partnering tool and in few instances posted the link partnering email on Forums and Blogs too. (accidentally)

Google detected this as "SPAMMING" and the traffic that I am getting on this very website (from Google only) has decreased drastically.

However, other Search Engines seem to be BLIND to this "SPAMMING" (Yes! I agree that in some sense this is spamming what I did). Rather MSN and Yahoo have started sending more traffic.

Though, the website is a quality website (quality content and all). But my greed to get more links has put this website down. I will have to relaunch it now.

Furthermore, I will start experimenting and will be enhancing my Link Partnering tool algorithms to detect these irrelevant websites and filtering out Blogs and Forums.

Moral of the story:

1 - Google has applied some SERIOUS SPAM combating algorithms. It's growing more and more conscious about SPAM. So be careful.

2 - Other Search Engines are still a lot away from what Google is doing. I salute Google for their dedication and taking SPAM websites seriously.

3 - Don't post your Website links on Comments section of Blogs or forums. (which I did accidentally)

4 - Look out for other ways of SPAMMING that Google has become resistant to. Kindly post them here for further evaluation by WebmasterWorld community. "

Please be careful with Google; they are very good at detecting link spam.

Evidence reveals MSN using editors to maintain quality in its search results

Some of our referral logs show visits from this URL, http://64.4.8.28/hrsv3/Judging.aspx , which at first glance looks like a spam site, but that is not the case.

When we visit mshrs.search.msn-int.com/hrsv3/Login.aspx we see a login screen; it looks like a human review area for MSN search results.

Google has been doing human review using eval.google.com for a long time.

Here is what GoogleGuy said about eval.google.com:

"walkman, your comment illustrates a misconception that I've seen in a couple places. The system that was up at eval.google.com was a console to evaluate quality passively, not to tweak our results actively. But when Henk van Ess submitted his own blog to Slashdot, he asserted "Real people, from all over the world, are paid to finetune the index of Google," and that made it sound like people were reaching in via this console to tweak results directly, which just isn't true at all.

I have serious reservations about Henk van Ess taking information from one of his own students (who presumably signed a non-disclosure agreement when the student agreed to help rate the quality of our results) and posting that information online. I also believe these web pages said things like "Google Proprietary and Confidential," but it appears that the screenshots have been cropped to exclude that information. Those are the two things that really made me sad, not the "breaking news" that Google evaluates its own results quality. It shouldn't be a surprise that Google evaluates the quality of its results in lots of ways--the fact is that every major search engine evaluates its relevance in many ways. "

In a follow-up post, GoogleGuy wrote:

I said

But when Henk van Ess submitted his own blog to Slashdot, he asserted "Real people, from all over the world, are paid to finetune the index of Google," and that made it sound like people were reaching in via this console to tweak results directly, which just isn't true at all. and you replied

Google Guy, do I read between the lines that you think my postings are irrelevant and misleading? That would be a shame.

I don't believe they're irrelevant, but yes: I do believe that the assertions you've made are misleading. In my original post, I was replying to walkman, who asked "ok, so how do you know you've been manually hit by this?" which implies that walkman thought that eval.google.com was responsible for sites being hit. Likewise, I have a ton of respect for Tara Calishain at ResearchBuzz. But her post about your site says "Basically what Henk seems to have found is a part of Google that allows humans to tweak search results to ostensibly get rid of spam and let the most contextually-relevant search results rise to the top." Again, Tara wonders whether your posts said that results were being directly tweaked. Then there are assertions from your site like "The Google testers are paid $10 - $20 for each hour they filter the results of Google." "Filter" again makes it sound like an active process. And your self-submission to Slashdot ("Real people, from all over the world, are paid to finetune the index of Google"), which also gives the impression that people used eval.google.com to change our search results.

So yes, I looked at the wording from when you submitted your own site to Slashdot, plus the use of active verbs such as "filter" on your own site, plus the comments of smart people such as Tara and walkman and how they interpreted what you wrote, and in my opinion your posts have been misleading. Again, this was not a console in which people could directly fine-tune, tweak, filter, or otherwise modify our search results. eval.google.com was for "eval," i.e. passive evaluation.

Your follow-up question was "Why pay them for something if it has no effect on the index? Must be charity then." Why are you surprised that we would pay people to rate search results? The job posting has been public, after all. We do provide ways for people to volunteer to help Google (e.g. see our translation console at http://services.google.com/tc/Welcome.html ), but to rate search results consistently and well takes time and training. I think it's perfectly normal to pay people for their time.

When you quoted me on your site, you said "Google Guy: I've serious reservations about Henk van Ess" and in your post you said "Google's spokesmen Google Guy, who I love to read, has serious reservations about me." Just to be clear, that's not accurate: I don't have reservations about you personally, Henk. I think I stated clearly that I have serious reservations about two of your actions. I mentioned those two specific things in my first post, and I'll reiterate them: you took information from one of your students, and you posted information that (in my opinion) was clearly proprietary/confidential. Regarding the first, I believe you wrote in a comment on your own site that this information came from a student of yours? Regarding the second, I'm quite surprised that you assert "I'm not aware of restrictions." Besides the copyright symbol that you mentioned earlier, the very first picture you posted has a link "An NDA Reminder..." on the left in the Important Announcements section, where NDA stands for non-disclosure agreement. Are you honestly saying that if you had realized there were restrictions, you wouldn't have done five blog posts (so far), posted screenshots, posted employee's real names on the web without consulting them, and posted two training documents? In that case, I'll ask politely. Henk, this information was for ratings training. It's copyrighted, and I'm sure that the evaluation group considers it proprietary/confidential. I'd appreciate it if you would stop posting these documents.

By the way, I apologize in advance if this post comes across as strident. I hate he-said-she-said stuff, and normally I try not to post when I'm at all ruffled. But I do think that things like posting an innocent employee's name from internal training documents is rude and unnecessary. Henk, feel free to include this entry on your blog, but if you do, I'd appreciate if you'd quote the entire post.

Then there is Yahoo's human review of search results; we can see referrals from the corp.yahoo domain.

Now we have MSN human review of search. I think it's mostly for quality control purposes. Anyway, it's good to see they are hand-reviewing search, since their results are spammed a lot by search engine spammers.

Yahoo update - yahoo updates its search index on 23rd january

WebmasterWorld.com members report a Yahoo update: webmasterworld.com/forum35/3814.htm

Check your rankings with our Yahoo rank checker:

https://www.searchenginegenie.com/yahoo-rank-checker.html

SEO Advice: Spell-check your web site - a typical joke from Matt Cutts

Matt Cutts seemed to be in a happy mood today when he blogged about a site that offers a 100% money-back guarantee. The banner carrying this offer has typos in it, so how do you win business when your banner has spelling errors? Nice that Matt noticed this.

See the spelling error in this message:

Boris Floricic shuts down the German Wikipedia even after his death

Boris Floricic was a German hacker and phreaker. He died a mysterious death in 1998; many reported his death as a suicide, while some members of his family claimed he was murdered by an intelligence agency.

Read more in the Wikipedia entry here: Boris Floricic hacker

Following a court ruling, the German wikipedia.de now reads as follows (translated from German):

"Dear friends of free knowledge,

By a preliminary injunction obtained before the Amtsgericht (local court) Berlin-Charlottenburg on 17 January 2006, the association Wikimedia Deutschland – Gesellschaft zur Förderung Freien Wissens e.V. has been prohibited from redirecting from this domain to the German-language edition of the free encyclopedia Wikipedia (wikipedia.org).

We are currently having our lawyers examine all possible steps so that we can give you uncomplicated access to the free encyclopedia Wikipedia again as quickly as possible. Please understand that, for legal reasons, we will not be making any further statements on this matter until further notice."

Ask Jeeves releases cache dates - when will MSN and Yahoo add cache dates to their caches?

The Search Engine Watch blog reports that Ask Jeeves has added cache dates to the cached pages in its results. This is a bold improvement. Yahoo and MSN still haven't introduced this feature; hopefully they will follow soon.

Google was the first search engine to introduce this option.

Google Talk now offers open federation - SEO blog news

Google Talk, Google's messaging service, now offers open federation, a new way to communicate with friends who use IDs on other services.

https://googleblog.blogspot.com/2006/01/open-federation-for-google-talk.html

According to Google, you can now talk to users with IDs from other services.

From the help page:

"We currently support open federation with any service provider that supports the industry standard XMPP protocol. This includes Earthlink, Gizmo Project, Tiscali, Netease, Chikka, MediaRing, and thousands of other ISPs, universities, corporations and individual users. "

What Google says:

"We've just announced open federation for the Google Talk service. What does that mean, you might be wondering. No, it has nothing to do with Star Trek. "Open federation" is technical jargon for when people on different services can talk to each other. For example, email is a federated system. You might have a .edu address and I have a Gmail address, but you and I can still exchange email. The same for the phone: there's nothing that prevents Cingular users from talking to Sprint users."

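Open federation works much like email: an XMPP address (a JID, of the form localpart@domainpart/resourcepart) names the user's server in its domain part, so a server can tell from the address alone whether a message stays local or has to be relayed to another provider's server. The sketch below only illustrates that idea; it is not Google Talk's implementation, it skips the full JID validation rules, and `parse_jid` and `needs_federation` are hypothetical helper names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class JID:
    """An XMPP address: localpart@domainpart/resourcepart (local and resource are optional)."""
    local: Optional[str]
    domain: str
    resource: Optional[str]


def parse_jid(address: str) -> JID:
    # The resource, if present, is everything after the first "/".
    bare, sep, resource = address.partition("/")
    # The localpart, if present, is everything before the "@" in the bare address.
    if "@" in bare:
        local, _, domain = bare.partition("@")
    else:
        local, domain = None, bare
    if not domain:
        raise ValueError(f"missing domain in {address!r}")
    # Domain names are case-insensitive, so normalise them for comparison.
    return JID(local or None, domain.lower(), resource if sep else None)


def needs_federation(sender: str, recipient: str) -> bool:
    """True when the two users live on different servers (server-to-server traffic)."""
    return parse_jid(sender).domain != parse_jid(recipient).domain


print(needs_federation("alice@gmail.com/Talk", "bob@earthlink.net"))  # True
print(needs_federation("alice@gmail.com", "carol@GMAIL.com"))         # False
```

The addresses in the usage lines are made up; the point is only that the domain part decides where a message has to go.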
Some sites report that MSN shows weird search behavior for the current SEO contest

Lots of blogs are monitoring the ongoing SEO contest, and some of them report that MSN shows weird behavior for this search: http://search.msn.com/results.aspx?q=V7ndotcom+elursrebmem&FORM=QBRE

At first, many thought MSN was filtering out results for these keywords. It now turns out that is not the case, and MSN search looks smarter than we thought. It seems MSN search first tries to understand the meaning of the words, and if it can't find them in its dictionary, it adds them to its adult search keywords.

The search V7ndotcom elursrebmem now returns about 41,000 results, but the following note appears below the search bar:

Web Results Page 1 of 41,216 results containing V7ndotcom elursrebmem (0.06 seconds) (with SafeSearch: Moderate)

Notice the mention of SafeSearch: Moderate. Usually this is displayed when adult-related keywords are searched.

So is MSN really that concerned about po*n in its results? If so, MSN looks great.
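The theory above can be written down as a simple rule. This is only a sketch of the blog's guess, not MSN's documented behaviour: `should_show_safesearch_note`, the toy dictionary and the placeholder adult-term list are all hypothetical.

```python
from __future__ import annotations

# Toy data for illustration only; a real engine's dictionary would be vastly larger.
KNOWN_WORDS = {"green", "widgets", "history", "seo", "contest"}
ADULT_TERMS = {"xxx"}  # hypothetical placeholder list


def should_show_safesearch_note(query: str,
                                dictionary: set[str] = KNOWN_WORDS,
                                adult_terms: set[str] = ADULT_TERMS) -> bool:
    """The blog's guess: flag known adult terms AND any word missing from the dictionary."""
    words = query.lower().split()
    if any(word in adult_terms for word in words):
        return True
    # Words the engine cannot recognise are treated as if they might be adult keywords.
    return any(word not in dictionary for word in words)


print(should_show_safesearch_note("V7ndotcom elursrebmem"))  # True
print(should_show_safesearch_note("green widgets"))          # False
```

Under this rule, a made-up contest phrase trips the filter simply because neither word is in the dictionary, which matches what the contest watchers saw.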

Million Dollar Homepage back after a severe and malicious DDoS attack

We reported in our previous post that the Million Dollar Homepage was suffering from a DDoS attack. Now it is back online.

This is what Alex says on his blog:

"I can confirm that MillionDollarHomepage.com has been subjected to a Distributed Denial of Service (DDoS) attack by malicious hackers who have caused the site to be extremely slow loading or completely unavailable since last Thursday, 12th January 2006.

I can also confirm that a demand for a substantial amount of money was made which makes this a criminal act of extortion. The FBI are investigating and I'm currently working closely with my hosting company, Sitelutions, to bring the site back online as soon as possible. More news soon."

Million Dollar Homepage down for the past 2 days; reports say it is because of a major DDoS attack launched against the site

The Million Dollar Homepage, which is supposed to run for 5 years, has now been down for a considerably long time. Most reports say the site went down because of a major DDoS (distributed denial of service) attack against it.

InfoWorld reports:

"The wildly successful pixel-powered Web page of a British university student is coming under increasingly intense DDOS (distributed denial of service) attacks trying to knock down the profitable brainstorm

The site is hosted on a server in Ashburn, Virginia, in a data center run by Equinix, where InfoRelay has much of its hardware, Weiss said. The high bandwidth use didn't cause problems for InfoRelay, as the company has a multigigabit network and provides bandwidth for a major search engine, Weiss said.

But The Million Dollar HomePage attracted malicious attention, coming under DDOS fire late Tuesday night and early Wednesday morning, he said.

Officials from InfoRelay met to figure out what they could do to stem the attacks within the constraints of Tew's service package, Weiss said. Tew wasn't on an enterprise-level deal that often includes advanced hardware, from vendors such as Cisco Systems, used to prevent the effects of DDOS attacks, Weiss said.

Administrators implemented several proprietary internal techniques to slow and alleviate the effects, he said. "We sort of volunteered our time to do what we could," Weiss said.

The attacks are coming from computers worldwide, including the U.S., Europe and Asia, Weiss said. The attacks could be the work of a botnet -- a network of computers illegally commandeered for sending spam and DDOS attacks."

Sitelutions.com, their web host, says the site was using up to 200 megabits per second of Internet bandwidth (oops):

"We are honored that Alex selected us to host his site. With so much worldwide coverage, Alex needed a host that could support the site's intense bandwidth requirements," said Paul Singh, Assistant Director of Operations at InfoRelay Online Systems, Inc.

Sitelutions has hosted The Million Dollar Homepage since September 23, 2005. At that time, Mr. Tew's site had grossed approximately $108,000. Since Sitelutions has hosted the site, Mr. Tew's site has received up to 16 million hits per day, 500,000 unique visitors per day, and has utilized up to 200 megabits per second of Internet bandwidth."

So when will the site be online?
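To put the Sitelutions figures in perspective, here is the back-of-the-envelope arithmetic. It assumes decimal units (1 megabit = 10^6 bits, 1 TB = 10^12 bytes) and treats the two quoted maxima independently: the 16 million daily hits are averaged over a whole day, and the 200 Mbit/s peak is treated as if it were sustained for 24 hours, which overstates real usage.

```python
SECONDS_PER_DAY = 24 * 60 * 60   # 86,400

hits_per_day = 16_000_000        # "up to 16 million hits per day"
peak_bandwidth_mbps = 200        # "up to 200 megabits per second"

# Average request rate if the hits were spread evenly over the day.
avg_hits_per_second = hits_per_day / SECONDS_PER_DAY

# 8 bits per byte; decimal megabits assumed.
bytes_per_second = peak_bandwidth_mbps * 1_000_000 / 8

# Upper bound: the peak rate sustained for a full day.
terabytes_per_day = bytes_per_second * SECONDS_PER_DAY / 1e12

print(f"average hits/second: {avg_hits_per_second:.0f}")       # average hits/second: 185
print(f"200 Mbit/s = {bytes_per_second / 1e6:.0f} MB/s")        # 200 Mbit/s = 25 MB/s
print(f"if sustained for a day: {terabytes_per_day:.2f} TB")   # if sustained for a day: 2.16 TB
```

Real traffic is bursty, so actual peaks in hits per second would be well above the daily average.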

Sketchy testimonial from an SEO company - a fake SEO testimonial

We got a link exchange request from a new SEO company. When we checked their site, we saw something very funny: the testimonial listed on every page of their site is totally fake.

It reads:

"My experience with Guide SEO has been exceptional. Our website, www.xyz.com moved from virtually no page ranking and listing on the search engines to being in the top ten in many of our most important keywords. Daily traffic to the site has increased eightfold in just a few months. My experience with Guide SEO has been exceptional. I highly recommend the group." Salmon.Mc Donald, President, e-learn, inc.

Optimized xyz.com?? Look at that: xyz consulting owns xyz.com, and they don't do any SEO.

e-learn, Inc.??? Who owns e-learn Inc.? It belongs to passged.com, and Salmon.Mc Donald is definitely not its president. So how many SEO companies have sketchy testimonials???

Very interesting.

v7n SEO contest kicks off - the keyword v7ndotcom elursrebmem must be ranked by May 15th 2006 for prize money of $4,000

The v7n.com SEO contest has started, and many webmasters and SEOs are getting directly involved. We will be actively monitoring this contest to bring you the latest updates and developments.

v7ndotcom elursrebmem is the keyword phrase that needs to be ranked by May 15th 2006. The prize money is $4,000.

First prize also includes an Apple iPod.

The rules for the competition are as follows:

1. In order to win the first prize, you must place first in Google (organic rankings) for the search term on May 15, 2006, noon, Pacific standard time.
2. Prizes for 2nd place through 5th place will be awarded based on web page placing in the corresponding positions in Google on May 15, 2006, noon, Pacific standard time.
3. For the purpose of this competition, indented listings in the SERPs will not be counted.
4. In order to qualify for the prize, a web page must include one of the following:
   a. A link back to the V7N home page. The link can be in any manner you wish, any anchor text you wish, with nofollow, without nofollow, JavaScript, cloaked or fried up and served with potatoes.
   b. One of the Official V7n SEO Contest banners. The banners may link to V7N, or not link to V7N. Linking the banner to any domain other than v7n will disqualify the contestant. Just to make this very clear, the banner may be unlinked. You do not need to link the banner graphic to v7n. For those who do not speak English: you do not need to link el-banner-o to v7n-o.
   c. The following text: We support v7n.com

Please remember, in order for the web page to be compliant, it must contain at least one of the elements listed above. If datacenters show different results on the 15 of May, the winner will be declared solely at the discretion of V7N. If there are any disputes as to the validity of the winner declaration, V7N will be the sole arbiter.
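Rules 1 to 3 boil down to a simple ranking calculation: skip the indented listings (Google's indented extra result from the same site) and read off the first five remaining positions. A minimal sketch, assuming you already have the Google results for the contest phrase as a list; `Listing` and `prize_places` are hypothetical names, and the rule 4 eligibility check (link, banner or text) is not modeled.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Listing:
    url: str
    indented: bool  # True for an indented (extra, same-site) result


def prize_places(serp: list[Listing], prizes: int = 5) -> list[str]:
    """Return the URLs in places 1..prizes, ignoring indented listings (rule 3)."""
    counted = [listing.url for listing in serp if not listing.indented]
    return counted[:prizes]


serp = [
    Listing("http://a.example/", indented=False),
    Listing("http://a.example/page2", indented=True),  # not counted
    Listing("http://b.example/", indented=False),
]
print(prize_places(serp))  # ['http://a.example/', 'http://b.example/']
```

Because different datacenters can return different orderings, the input list is only meaningful for the one datacenter it was fetched from, which is why the rules leave the final call to V7N.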

Good luck to everyone in the SEO contest v7ndotcom elursrebmem; we wish everyone all the best.

Good day,

SEO Blog Team,

Million Dollar Homepage owner Alex is a new millionaire

Alex of the Million Dollar Homepage has sold out all his pixels. Alex came up with a great concept, milliondollarhomepage.com: he sold a million pixels on his homepage at $1 per pixel, and he sold his final pixels in an eBay auction for more than $38,000.

He came up with one of the greatest concepts in the history of the Internet, and he has been featured on the BBC, CNN and other top news sites.

In SEO, it's all about natural linking and doing something unique that makes people link to you. Alex did this, and we hope someone else does something even more interesting. We'd love to hear any good suggestions from our readers that could bring in lots of natural links.

Care to share?

Is triangular linking / 3-way linking helpful for a site? Is a three-way link exchange helpful?

3-way linking is not a great strategy, and we at SEG don't endorse 3-way link exchanges. It is not a healthy way to gain links. In most of the 3-way link exchange requests we get, people propose a backlink from some spam directory while we have to give a link from our main, legitimate site, which is pretty unfair.

Plus, it doesn't work properly. We recommend that no one do 3-way linking.
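To make the pattern concrete: in a reciprocal exchange, site B links straight back to site A, while in a typical 3-way exchange the link back to A comes from a different site C run by B's owner (often a directory). A minimal sketch, assuming you already know the link graph and which sites share an owner (in practice that ownership data is the hard part); `classify_exchange` is a hypothetical helper.

```python
from __future__ import annotations


def classify_exchange(links: set[tuple[str, str]], owner: dict[str, str],
                      a: str, b: str) -> str:
    """Classify how site a's outbound link to site b is paid back."""
    if (a, b) not in links:
        return "no link"
    if (b, a) in links:
        return "reciprocal"  # B links straight back to A
    b_owner = owner.get(b)
    for source, target in links:
        # A different site run by B's owner links back to A: the 3-way pattern.
        if target == a and source != b and b_owner is not None and owner.get(source) == b_owner:
            return "three-way"
    return "one-way"


links = {
    ("main-site.example", "partner.example"),       # our main site links out
    ("spam-directory.example", "main-site.example"),  # the "payback" link
}
owner = {"main-site.example": "us", "partner.example": "them", "spam-directory.example": "them"}
print(classify_exchange(links, owner, "main-site.example", "partner.example"))  # three-way
```

The example mirrors the complaint in the post: the legitimate main site gives a real link, and what comes back is a link from the other party's directory.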

Yahoo Site Explorer - a nice friend for SEO companies

Yahoo Site Explorer is a tool that helps you look up a site's backlinks, the site's pages listed in Yahoo search, etc. It presents the data in a simpler form than a normal search, and it is very quick and very helpful for serious research.

The export feature of Yahoo's Site Explorer is very helpful in search engine optimization: you can do a linkdomain: search and export all the data to an Excel sheet.
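Once exported, the backlink list is easy to summarise, for example by counting how many backlinks come from each linking host. A minimal sketch, assuming the export is saved as tab-separated text with a header row and a column named `URL`; those format details are assumptions, so adjust `url_column` and `delimiter` to match the file you actually get. `linking_domains` is a hypothetical helper.

```python
import csv
import io
from collections import Counter
from urllib.parse import urlsplit


def linking_domains(export_text: str, url_column: str = "URL", delimiter: str = "\t") -> Counter:
    """Count backlinks per linking host in an exported backlink list (assumed format)."""
    counts: Counter = Counter()
    reader = csv.DictReader(io.StringIO(export_text), delimiter=delimiter)
    for row in reader:
        url = (row.get(url_column) or "").strip()
        # .hostname is lower-cased; it is None when the URL has no scheme/host part.
        host = urlsplit(url).hostname if url else None
        if host:
            counts[host] += 1
    return counts


sample = (
    "Title\tURL\n"
    "A\thttp://www.example.com/a\n"
    "B\thttp://WWW.example.com/b\n"
    "C\thttp://blog.example.org/\n"
)
for host, n in linking_domains(sample).most_common():
    print(host, n)
```

Counting by host rather than by URL makes it easy to see whether a site's backlinks come from many different sites or mostly from a few.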

Yahoo's approach is the exact opposite of Google's.

Google just doesn't show proper backlinks for a site. Also, its ordering of site: search results is not logical at all; apart from the homepage, the other pages are not arranged sensibly.

Yahoo's site: ordering is more logical; it is based on an algorithmic assessment of the quality of each listed page.

We hope Yahoo continues this great service for a long time.

Pages falling out of MSN search for no reason - WebmasterWorld members report pages dropping from MSN results

WebmasterWorld.com members report pages falling out of MSN's index. Some of them notice their homepages dropping; others notice a steady trend of pages dropping.

Most notice a huge flux in MSN results since Christmas. Is MSN onto something? Let's wait and see.

Rich of WebmasterWorld says:

"Pages are falling off for no reason. On using the site command it returns 650 pages which is less than 1% of the sites content.

I noticed that some pages return the page title with the following in the description field rather than the meta description on the page:-

"To get the most out of the XXXX site please either enable Javascript or download a newer version of your favourite browser - Mircosoft Explorer Mozilla Firefox Alternatively please follow "

On watching the recent video clip of the two msn guys talking about the search engine learning from itself i do wonder if the have a major problem with this search facility.

I would say they are trying to be smart by reading a sites pages in a different browser and it clearly doesn't work - rather than trying to be cleaver they should try and get the basic search right first - such as deep indexing of websites for a start

In this example msn clearly does not cash all of the sites pages despite the site being an established site and authority with thousands of backlinks nor does the bot fully index the site when it visits.

On the face of it i would say that the site has triggered some sort of filter and that's why its a)not deep spidering the site and b) not indexing the pages and c) removing pages it already has in its index - whats causing this i am clueless of!

Currently based on other sites we work on i would say that if you knock together a thin site low on content, with keyword domain name you will rank top for that keyword very easily. In one example a site with one page but loads of links to it ranks high in an extremely sought after sector.

All in all, its still early days for the search but i think its got serious problems and issues that need fixing"

More here: webmasterworld.com/forum97/716.htm

The amazing Google Proxy - Google's proxy helps you surf the web safely

It's amazing to see Google offering a proxy service. Though this is somewhat old news, I only just heard of it. It offers safer browsing: although it doesn't hide your IP address, it still protects your system from potential viruses, spyware, trojans, adware, malware, scumware, etc.

https://www.google.com/gwt/n

SEO blog Team,

Del.icio.us - a great site to get tagged on; massive traffic flow experienced

Del.icio.us is a great site. It was recently acquired by Yahoo. It is a bookmarking site where people bookmark a page or site they like.

If your site gets into the popular listing at del.icio.us/popular, you can expect massive traffic, and many other sites will pick up the story too. To get into del.icio.us/popular, many people around the world have to bookmark your page at around the same time.
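How del.icio.us actually builds its popular page isn't documented here; as an assumption, "many people bookmarking at the same time" can be modeled as counting bookmarks per URL inside a sliding time window, so old bookmarks stop counting. `PopularTracker` is a hypothetical illustration and assumes bookmarks arrive in timestamp order.

```python
from __future__ import annotations

from collections import defaultdict, deque


class PopularTracker:
    """Hypothetical 'popular' list: most-bookmarked URLs within a recent time window."""

    def __init__(self, window_seconds: float) -> None:
        self.window = window_seconds
        self.events: deque[tuple[float, str]] = deque()          # (timestamp, url), oldest first
        self.counts: defaultdict[str, int] = defaultdict(int)   # bookmarks per URL in the window

    def bookmark(self, url: str, timestamp: float) -> None:
        self.events.append((timestamp, url))
        self.counts[url] += 1
        self._expire(timestamp)

    def _expire(self, now: float) -> None:
        # Drop bookmarks that have fallen out of the window.
        while self.events and self.events[0][0] <= now - self.window:
            _, old_url = self.events.popleft()
            self.counts[old_url] -= 1
            if self.counts[old_url] == 0:
                del self.counts[old_url]

    def popular(self, now: float, top_n: int = 10) -> list[tuple[str, int]]:
        self._expire(now)
        return sorted(self.counts.items(), key=lambda kv: (-kv[1], kv[0]))[:top_n]


tracker = PopularTracker(window_seconds=3600)
for t in range(5):
    tracker.bookmark("http://a.example/", timestamp=t)
tracker.bookmark("http://b.example/", timestamp=10)
print(tracker.popular(now=100))  # [('http://a.example/', 5), ('http://b.example/', 1)]
```

With a window like this, five bookmarks in one hour beat fifty spread over a year, which is the "at the same time" effect the post describes.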

List of TLD (top-level domain) extension codes

Here is a list of country-code TLDs. Each extension shows which country or territory it belongs to. It was compiled by ICANN.

ICANN - Internet Corporation for Assigned Names and Numbers

IANA - Internet Assigned Numbers Authority

.ac – Ascension Island

.ad – Andorra

.ae – United Arab Emirates

.af – Afghanistan

.ag – Antigua and Barbuda

.ai – Anguilla

.al – Albania

.am – Armenia

.an – Netherlands Antilles

.ao – Angola

.aq – Antarctica

.ar – Argentina

.as – American Samoa

.at – Austria

.au – Australia

.aw – Aruba

.az – Azerbaijan

.ax – Aland Islands

.ba – Bosnia and Herzegovina

.bb – Barbados

.bd – Bangladesh

.be – Belgium

.bf – Burkina Faso

.bg – Bulgaria

.bh – Bahrain

.bi – Burundi

.bj – Benin

.bm – Bermuda

.bn – Brunei Darussalam

.bo – Bolivia

.br – Brazil

.bs – Bahamas

.bt – Bhutan

.bv – Bouvet Island

.bw – Botswana

.by – Belarus

.bz – Belize

.ca – Canada

.cc – Cocos (Keeling) Islands

.cd – Congo, The Democratic Republic of the

.cf – Central African Republic

.cg – Congo, Republic of

.ch – Switzerland

.ci – Cote d'Ivoire

.ck – Cook Islands

.cl – Chile

.cm – Cameroon

.cn – China

.co – Colombia

.cr – Costa Rica

.cs – Serbia and Montenegro

.cu – Cuba

.cv – Cape Verde

.cx – Christmas Island

.cy – Cyprus

.cz – Czech Republic

.de – Germany

.dj – Djibouti

.dk – Denmark

.dm – Dominica

.do – Dominican Republic

.dz – Algeria

.ec – Ecuador

.ee – Estonia

.eg – Egypt

.eh – Western Sahara

.er – Eritrea

.es – Spain

.et – Ethiopia

.eu – European Union

.fi – Finland

.fj – Fiji

.fk – Falkland Islands (Malvinas)

.fm – Micronesia, Federal State of

.fo – Faroe Islands

.fr – France

.ga – Gabon

.gb – United Kingdom

.gd – Grenada

.ge – Georgia

.gf – French Guiana

.gg – Guernsey

.gh – Ghana

.gi – Gibraltar

.gl – Greenland

.gm – Gambia

.gn – Guinea

.gp – Guadeloupe

.gq – Equatorial Guinea

.gr – Greece

.gs – South Georgia and the South Sandwich Islands

.gt – Guatemala

.gu – Guam

.gw – Guinea-Bissau

.gy – Guyana

.hk – Hong Kong

.hm – Heard and McDonald Islands

.hn – Honduras

.hr – Croatia/Hrvatska

.ht – Haiti

.hu – Hungary

.id – Indonesia

.ie – Ireland

.il – Israel

.im – Isle of Man

.in – India

.io – British Indian Ocean Territory

.iq – Iraq

.ir – Iran, Islamic Republic of

.is – Iceland

.it – Italy

.je – Jersey

.jm – Jamaica

.jo – Jordan

.jp – Japan

.ke – Kenya

.kg – Kyrgyzstan

.kh – Cambodia

.ki – Kiribati

.km – Comoros

.kn – Saint Kitts and Nevis

.kp – Korea, Democratic People's Republic

.kr – Korea, Republic of

.kw – Kuwait

.ky – Cayman Islands

.kz – Kazakhstan

.la – Lao People's Democratic Republic

.lb – Lebanon

.lc – Saint Lucia

.li – Liechtenstein

.lk – Sri Lanka

.lr – Liberia

.ls – Lesotho

.lt – Lithuania

.lu – Luxembourg

.lv – Latvia

.ly – Libyan Arab Jamahiriya

.ma – Morocco

.mc – Monaco

.md – Moldova, Republic of

.mg – Madagascar

.mh – Marshall Islands

.mk – Macedonia, The Former Yugoslav Republic of

.ml – Mali

.mm – Myanmar

.mn – Mongolia

.mo – Macau

.mp – Northern Mariana Islands

.mq – Martinique

.mr – Mauritania

.ms – Montserrat

.mt – Malta

.mu – Mauritius

.mv – Maldives

.mw – Malawi

.mx – Mexico

.my – Malaysia

.mz – Mozambique

.na – Namibia

.nc – New Caledonia

.ne – Niger

.nf – Norfolk Island

.ng – Nigeria

.ni – Nicaragua

.nl – Netherlands

.no – Norway

.np – Nepal

.nr – Nauru

.nu – Niue

.nz – New Zealand

.om – Oman

.pa – Panama

.pe – Peru

.pf – French Polynesia

.pg – Papua New Guinea

.ph – Philippines

.pk – Pakistan

.pl – Poland

.pm – Saint Pierre and Miquelon

.pn – Pitcairn Island

.pr – Puerto Rico

.ps – Palestinian Territories

.pt – Portugal

.pw – Palau

.py – Paraguay

.qa – Qatar

.re – Reunion Island

.ro – Romania

.ru – Russian Federation

.rw – Rwanda

.sa – Saudi Arabia

.sb – Solomon Islands

.sc – Seychelles

.sd – Sudan

.se – Sweden

.sg – Singapore

.sh – Saint Helena

.si – Slovenia

.sj – Svalbard and Jan Mayen Islands

.sk – Slovak Republic

.sl – Sierra Leone

.sm – San Marino

.sn – Senegal

.so – Somalia

.sr – Suriname

.st – Sao Tome and Principe

.sv – El Salvador

.sy – Syrian Arab Republic

.sz – Swaziland

.tc – Turks and Caicos Islands

.td – Chad

.tf – French Southern Territories

.tg – Togo

.th – Thailand

.tj – Tajikistan

.tk – Tokelau

.tl – Timor-Leste

.tm – Turkmenistan

.tn – Tunisia

.to – Tonga

.tp – East Timor

.tr – Turkey

.tt – Trinidad and Tobago

.tv – Tuvalu

.tw – Taiwan

.tz – Tanzania

.ua – Ukraine

.ug – Uganda

.uk – United Kingdom

.um – United States Minor Outlying Islands

.us – United States

.uy – Uruguay

.uz – Uzbekistan

.va – Holy See (Vatican City State)

.vc – Saint Vincent and the Grenadines

.ve – Venezuela

.vg – Virgin Islands, British

.vi – Virgin Islands, U.S.

.vn – Vietnam

.vu – Vanuatu

.wf – Wallis and Futuna Islands

.ws – Western Samoa

.ye – Yemen

.yt – Mayotte

.yu – Yugoslavia

.za – South Africa

.zm – Zambia

.zw – Zimbabwe
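If you want to use the list above programmatically, for example to guess which country a domain is registered under, it maps naturally onto a dictionary keyed by the last label of the hostname. A minimal sketch with only a handful of entries copied from the list; `CC_TLDS` and `tld_country` are hypothetical names. Keep in mind that a ccTLD only says where a domain is registered, not necessarily where the site or its owner is based.

```python
from typing import Optional

# A few entries from the list above; extend with the rest as needed.
CC_TLDS = {
    "ca": "Canada",
    "de": "Germany",
    "in": "India",
    "uk": "United Kingdom",
    "us": "United States",
}


def tld_country(hostname: str) -> Optional[str]:
    """Return the country/territory for a host's top-level domain, or None if unknown."""
    # Ignore a trailing root dot, take the last label, and compare case-insensitively.
    label = hostname.rstrip(".").rsplit(".", 1)[-1].lower()
    return CC_TLDS.get(label)


print(tld_country("www.example.co.uk"))  # United Kingdom
print(tld_country("example.com"))        # None
```

Generic extensions such as .com are not in the table, so they come back as None rather than being guessed.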

ICANN is Internet Corporation for Assigned Names and Numbers

IANA - Internet Assigned Numbers Authority


What causes the sandbox filter? New experiment reveals why the sandbox filter exists. Is the sandbox a side effect of TrustRank?


There have been numerous discussions of Google's sandbox filter, which holds sites back for up to 16 months before they start ranking well in Google's results. So what causes this filter? After losing patience trying to rank client sites, we at Search Engine Genie conducted a test across about 15 sites. The test revealed interesting results; the following is a summary of our Anti-Google Sandbox Filter experiment.

1. Does the sandbox filter really exist?

Based on our experiment, the sandbox filter does exist. However, it doesn't affect all sites; it affects sites that are artificially linked to rank through search engine optimization.

2. What causes the sandbox filter?

This is an important question that has been asked many times in forums, message boards and blogs, and no one has been able to give a definite answer. Even we had to struggle a bit to figure out what the sandbox is all about. Finally, by experimenting with different strategies on about 15 sites, we were able to find the cause of the sandbox filter. Our experiments prove that about 90% of the theories circulating in forums and articles are wrong. The sandbox filter is caused purely by links and nothing else: a site is placed in the sandbox when its link growth looks abnormal in the eyes of Google's algorithm.

As we all know, the Internet is based solely on natural linking, and search engines and their ranking algorithms are the main cause of artificial linking. Search engines like Google have now risen to the occasion and are combating link spam with sophisticated algorithms like the sandbox filter. The sandbox is a natural phenomenon affecting sites that use any sort of SEO method to gain links; the next topic explains why. The sandbox filter relies on TrustRank, not PageRank as it did before, to identify the quality of links.

TrustRank is a new algorithm now active at Google. Since the name TrustRank is used by Google, Yahoo! and other search engines alike, we will simply talk about trusted links. Trusted links are links that have been hand-edited for approval, or that are algorithmically given maximum credit to vote for other sites, such as links from reputable sites and from industry authorities like .gov sites. Google's new algorithm looks at what type of trusted links a site has, especially if the site is new. If a site starts off with SEO-style links rather than naturally gained trusted links, it will be placed in the sandbox for a period of up to 1 year or even more.
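The post does not describe how Google actually computes trust, and what follows is not Google's algorithm. It is a minimal sketch of the published TrustRank technique (Gyöngyi, Garcia-Molina and Pedersen, "Combating Web Spam with TrustRank", 2004), which matches the idea described above: a small seed set of hand-reviewed pages receives all the initial trust, and that trust flows out along links as a biased PageRank. The damping factor of 0.85, the 20 iterations and the page names in the example graph are assumptions chosen for illustration.

```python
from typing import Dict, List, Set


def trust_rank(
    links: Dict[str, List[str]],
    seeds: Set[str],
    alpha: float = 0.85,   # assumed damping factor
    iterations: int = 20,  # assumed number of propagation rounds
) -> Dict[str, float]:
    """Propagate trust from hand-reviewed seed pages along outgoing links.

    links maps each page to the pages it links to; seeds are the pages an
    editor has approved as trustworthy.
    """
    if not seeds:
        raise ValueError("TrustRank needs at least one trusted seed page")
    pages = set(links) | set(seeds) | {q for outs in links.values() for q in outs}
    # Static distribution d: trust is injected only at the seed pages.
    d = {p: (1.0 / len(seeds) if p in seeds else 0.0) for p in pages}
    t = dict(d)
    for _ in range(iterations):
        # Every page keeps (1 - alpha) of its static trust...
        nxt = {p: (1 - alpha) * d[p] for p in pages}
        # ...and receives alpha times the trust of each page linking to it,
        # split evenly across that page's outgoing links.
        for p, outs in links.items():
            if outs:
                share = alpha * t[p] / len(outs)
                for q in outs:
                    nxt[q] += share
        # Simplification: trust held by pages with no outgoing links is dropped.
        t = nxt
    return t


# Hypothetical link graph: "gov" is the only hand-approved seed page.
links = {
    "gov": ["a"],
    "a": ["b"],
    "b": ["a"],
    "spam1": ["spam2"],
    "spam2": ["spam1", "b"],
}
scores = trust_rank(links, seeds={"gov"})
for page, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{page:6} {score:.3f}")
```

In this toy graph the two spam pages end up with zero trust however many links they trade, because no trusted page points at them. That is one way to read the post's argument that links from weak sites do not build trust.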

3. What factors / methods lead to the sandbox filter?

All types of SEO-style link building will lead to the sandbox filter.

a. Reciprocal link building:

Reciprocal link building is one important method that will definitely lead to the sandbox / aging filter. When a site starts off with reciprocal link building, it will definitely be sandboxed. This is because, first of all, reciprocal link building is not the way to build trusted links. Sites that are trusted / hand-edited for approval do not have to trade links with other sites; they would rather voluntarily link out to other sites, and no one can force them to link out or trade links. Most of the sites involved in reciprocal link building are very weak themselves, so a site that relies on reciprocal link building is not going to get trusted links, and a new site that grows with untrusted links will be placed in the aging filter.

We don't blame reciprocal link building; in fact, we do it all the time for our clients. But reciprocal link building by itself doesn't add any value for end users, and it is done purely to manipulate search engine result pages. So Google is not to be blamed for coming out with such an amazing algorithm, one that fights aggressive link building so effectively. For a new site, we don't recommend reciprocal link building right away: first build trust for your site, then do reciprocal link building, and there will be no issues at that point. So how do you know you have built trust with Google's algorithm? Ranking proves it. If your site ranks well for both competitive and non-competitive terms, you can safely assume that you are no longer in the sandbox. We are talking about ranking for good terms for a minimum of 1 month, not for a day or a week.
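The test for leaving the sandbox given above, ranking well for both competitive and non-competitive terms for at least a month rather than a day or a week, can be checked against your own daily rank-tracking records. This is a minimal sketch that assumes you record one position per keyword per day. Treating "ranking well" as top 10 and "a month" as 30 consecutive days are assumptions, and the function names and keywords are hypothetical.

```python
from datetime import date, timedelta
from typing import Dict, Optional


def current_streak(ranks: Dict[date, Optional[int]], top_n: int = 10) -> int:
    """Count consecutive days, ending at the latest observation, on which a
    keyword ranked at position top_n or better.

    ranks maps a calendar day to the observed position (None = not found).
    A missing day breaks the streak.
    """
    streak = 0
    expected: Optional[date] = None
    for day in sorted(ranks, reverse=True):  # newest observation first
        if expected is not None and day != expected:
            break  # gap in the tracking data
        pos = ranks[day]
        if pos is None or pos > top_n:
            break  # not "ranking well" on this day
        streak += 1
        expected = day - timedelta(days=1)
    return streak


def looks_out_of_sandbox(
    history: Dict[str, Dict[date, Optional[int]]],
    top_n: int = 10,     # assumed meaning of "ranking well"
    min_days: int = 30,  # "a minimum of 1 month"
) -> bool:
    """True if every tracked keyword has ranked well for at least min_days."""
    return bool(history) and all(
        current_streak(ranks, top_n) >= min_days for ranks in history.values()
    )


start = date(2006, 1, 1)
month = {start + timedelta(days=i): 5 for i in range(30)}
week = {start + timedelta(days=i): 3 for i in range(7)}
print(looks_out_of_sandbox({"competitive term": month, "niche term": month}))  # True
print(looks_out_of_sandbox({"competitive term": month, "niche term": week}))   # False
```

Counting back from the most recent observation, rather than taking the longest streak ever seen, reflects the post's point that a site is out of the sandbox only while it keeps ranking.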

b. Buying links:

This is another important method that will definitely lead to a severe sandbox filter. Our experiment proved that buying links for a new site hurts it badly in Google. When you start a site, don't immediately go and buy a lot of links. Buying links is not the best way to gain trusted links, and most of the sites where you can buy links are actively monitored by Google. Even if you buy a link from a great site in your field, it still won't be considered a great trusted link. Site-wide links (links placed throughout a site) are especially dangerous for a new site; this type of link will definitely delay the site's ranking to a great extent. If you do buy a link from a great site, make sure you get the link from only one page, and make sure it is not placed in the footer; it should sit somewhere inside the content so that it looks more natural. It is not worth buying links for new sites. Give the site time to grow with naturally gained backlinks.

c. Directory submission:

Directory submission has proved worthless when it comes to avoiding the sandbox. Directory submissions don't directly cause the sandbox, but those links will definitely affect a site's reputation, especially when the site is new. As discussed before, it is important to gain trusted links when a site is new, so we recommend against directory submission as the first step in building links: when search engines see those links, they will place your site into the aging filter. Most directories are newbie and start-up directories; we can name just 4 or 5 directories that are trustworthy enough to be worth getting into. Ask yourself: would you go to a directory to find relevant sites today? I am afraid not. Directories are a thing of the past, and people use search engines to find all kinds of information. That is why search engines don't prefer to list directories in their results. The 2 exceptions are dmoz.org and the Yahoo! Directory.