Search Engine Optimization

Search Engine Optimization and English Spelling

Recently I was looking at the keywords visitors use to find my website, and I thought I would discuss several spelling-related issues with other people.

I found that a lot of people coming to my website do not use the correct spellings when searching in the search engines. It is a fact that a huge number of people who use the net throughout the world do not have a good knowledge of English. You may find that many people in India, China, France, Germany, Russia and several other countries struggle with correct English spellings.

One more problem is the different spellings used in UK English and International English. When webmasters optimize their websites, they pick the most commonly used terms and phrases for their sites, follow either UK English or International English, and optimize accordingly. But if, for example, you follow UK English, your site may not rank at the top for the corresponding International English keyword. Some common examples are Organisation and Organization, colour and color, Optimisation and Optimization, etc.

I found that many webmasters use the following techniques to avoid the above problems:-

1. Use the alternative spelling of the keywords in the meta tags.

2. Use all probable variants and misspelled words in the main body.

3. Some people hide the misspelled words by giving the text the same color as the page background, so the words are not visible to humans but can still be crawled by search engine bots.

But I feel all the above tricks are no longer useful: Google doesn’t give much importance to meta tags nowadays, no one likes putting misspelled words in the main body, and the last one is strongly discouraged because many search engines don’t like this approach and may remove your website from their index.

So what is a good approach?

I recommend the following approaches to address the issue:-

1. Use the misspelled words in the Alt tags of your images, because many search engines index the alt tags.

2. Off-page factors: while doing link building for your website, you could intentionally use the wrong or misspelled words in the anchor text, which would solve your problem to a great extent.

Get all your pages indexed in search engines

We have already talked about unique content and ways to get it. Now we will talk about getting every page of your website indexed in search engines. So, how do you get your entire website indexed in Google?

Before discussing that, how will you know whether a website is indexed or not? The Google Toolbar provides a way to check the cached pages of a website, which is very useful. Just check whether a specific web page has been cached by Google; if not, then that page or website is not in the Google index.

So how do you get all your pages indexed? First, try to make your website static. A static page with an .html or .php extension gets indexed by search engines faster than URLs with characters such as =, ?, & and id. Although many search engines index dynamic pages as well, static pages carry more weight than dynamic pages and do well in the search results too. You could also read about the mod_rewrite module of Apache to learn more about this.
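As a rough illustration of the static versus dynamic distinction, here is a minimal Python sketch that flags URLs carrying a query string. The function name `looks_dynamic` and the example.com URLs are hypothetical, and the rule is only the heuristic described in this post, not how any search engine classifies pages.

```python
from urllib.parse import urlsplit


def looks_dynamic(url: str) -> bool:
    """Return True if the URL carries a query string (e.g. ?id=7&cat=2)."""
    return bool(urlsplit(url).query)


# Hypothetical URLs used only for illustration.
urls = [
    "http://example.com/products/blue-widget.html",
    "http://example.com/product.php?id=7&cat=2",
]
for url in urls:
    label = "dynamic" if looks_dynamic(url) else "static"
    print(f"{label}: {url}")
```

A list of the URLs flagged this way could be a starting point for deciding which pages to rewrite with mod_rewrite.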

A good way to get all your pages indexed by search engines is to have a site map, which is a collection of links to your pages. A site map is advised for larger websites, where the number of pages is large. There are many ways to make a site map. You could simply build a page on your website and add all your links there. Then put a link to that page at the bottom of your home page, labelled as the site map, so that all your links can be reached from it.

Here I will mention some great site map creation websites. There are tools on the net, most of them free, that will generate site maps for your website. The tool finds all the web pages on your website and lists them, and your site map is ready. For improved indexing there is also an XML site map option; since search bots read XML easily, you could upload an XML site map if you like. Here are the links to the websites: http://gsitecrawler.com and http://xml-sitemaps.com/. There could be thousands of such websites, but it’s better to use these. They will help you make proper site maps, and Google will index your site fast.
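If you prefer to see what such a tool produces, here is a minimal Python sketch that writes an XML site map by hand. It assumes you already have the list of page URLs (the example.com addresses are placeholders), and the helper name `build_sitemap` is made up for this post. The `urlset`, `url` and `loc` elements and the namespace come from the public sitemaps.org format.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(page_urls):
    """Return sitemap XML (as a string) with one <url><loc> entry per page."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page_url in page_urls:
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = page_url
    return ET.tostring(urlset, encoding="unicode")


# Placeholder URLs; replace them with the pages of your own site.
pages = ["http://example.com/", "http://example.com/about.html"]
sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

The output can be saved as a file such as sitemap.xml and uploaded to the root of the site.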

Unique Site Content to Drive Traffic

This is the most important reason why a website does well on any search engine. There are numerous methods to bring a search engine’s crawler to your site. But if your website does not have sufficient unique content, then your website or blog may not be indexed. Also, if you have duplicate content, your website can be blacklisted by search engines.

So the straightforward answer is: start making content. There are lots of content writing software packages, but avoid using those. If you want your pages to rank well in search engines, make unique content. Write a few pages yourself each day. In a month you will have a decent number of pages on your website.

There is no harm in buying some unique content either. There are teams of professionals who write quality content for websites. Also, to those of you who run websites, I suggest you start a blog. WordPress and Movable Type are examples of blogging scripts. Install one and your blog is ready to use. If you are running a company, you could simply put some company information and inside news on your blog. If you keep posting a few pages each day, in a few months the hits to your blog could exceed those of your website’s home page. I would say blogs get more traffic than most websites. You could also use Blogger to post on your site.

If you need a free option and still want unique content, try adding an “add article” feature to your website. People will then write unique content for you. This is a free way to get some unique content for your pages.

Now there is a big question: how do you know whether a page on your website is unique or not? There is a simple way to find out: just use this website. It will let you know how many such pages are already available on the internet. From there you can tell whether your content is unique or not. There is probably no harm in keeping a little duplicate content on your website. But if there is a lot, you will face problems with the search engines.

Quick index in search engines

You can get indexed in search engines in less than 24 hours of your site being online. This blog was indexed by search engines in a few days. This is due to the feeds, and the backlinks that I create from articles and directory submissions also play a huge role. The feeds, though, are the main reason why blogs get indexed quicker than ordinary websites. So what I suggest is that every website should have a blog. You can try WordPress or Movable Type blogs. A blog will help your website get indexed faster, and the crawl rate of search engine crawlers will rise. All you have to do is make a blog and keep adding content as frequently as possible.

What are feeds, and how do they help bring search engine crawlers to blogs and websites?

Search engine spiders, crawlers, etc. read and understand XML easily. Feeds are generally RSS or Atom. The fact is, many people like to read content and news in their feed readers. For that, you have to submit your blog’s feeds to a lot of feed submission websites.
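To show what a feed actually contains, here is a minimal Python sketch that builds a bare-bones RSS 2.0 document. Blog scripts normally generate the feed for you, so this is only an illustration; the helper name `build_rss`, the blog title and the example.com URLs are all made up for this post.

```python
import xml.etree.ElementTree as ET


def build_rss(title, link, description, posts):
    """Build a minimal RSS 2.0 document; posts is a list of (title, link) pairs."""
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = title
    ET.SubElement(channel, "link").text = link
    ET.SubElement(channel, "description").text = description
    for post_title, post_link in posts:
        item = ET.SubElement(channel, "item")
        ET.SubElement(item, "title").text = post_title
        ET.SubElement(item, "link").text = post_link
    return ET.tostring(rss, encoding="unicode")


# Hypothetical blog data used only for illustration.
feed_xml = build_rss(
    "Example Blog",
    "http://example.com/blog/",
    "Posts from a hypothetical blog",
    [("First post", "http://example.com/blog/first-post.html")],
)
print(feed_xml)
```

Each new post becomes one more `item` entry, which is why a frequently updated feed gives crawlers and feed readers something new to fetch.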

You might have heard about “My Google”, “My Yahoo”, etc. They are your private Google and Yahoo pages. You can customize your page and add feeds there, so they are displayed on that page and you can quickly read news from your preferred sources.

Have you ever heard of the blog and ping technique? When someone adds your feed to Google or any other site, on their own My Google page, the Google crawler will come to the blog through the feed URL and collect data from there. So make a blog and ping it, as it is the easiest way to get the crawlers to your website. It is also the quickest way to get your site or blog indexed.

You can get feed services to help your blogs as well. I recommend this website for feeds: http://www.feedburner.com/. Create a free account there, log in, then copy your RSS or Atom feed URL and paste it on the home page of the website. You need to be registered and logged in to do all this properly. Once you log in you will get a profile page, from which you can enter your feed URL. After that you will see your URL listed in your profile; click on the listed URL to get the control panel for your feed. Give it some time, and gradually you will see the importance of all this.

User Optimization is the real SEO

You may be confused when people tell you that you need to ‘optimize for search engines’, when you don’t need to at all. The core aspect of search engines that we always forget is that not only are they programmed to act like users, but they were created, and are controlled, by users.

The similarities between elements that are important to search engines and the elements that are important to users are remarkably obvious.

Let’s have a look

. Titles and the meta content

o Search engines: Use them to pick up on what the page is about, and rank it accordingly.

o Users: Use them to pick up on what the page is about, and to decide whether or not to use it.

. Header tags

o Search engines: Use them to assess what the main body of text is about, and rank the page accordingly.

o Users: Use them to quickly see what the main body of the text is about.

. Anchor Text

o Search engines: Use it to understand what the page being linked to is about, and to rank that page according to its relevance to the anchor text and the quality of the inbound links.

o Users: Use it to understand what the page being linked to is about.

. Alt Attributes

o Search engines: Use them to understand what the image is, or what it is representing.

o Users: Use them to understand what the image is, or what it is representing. This applies mostly to disabled users and people who browse with images turned off.

. Page Copy

o Search engines: Use it to establish which search queries the content is relevant to.

o Users: Use it to establish whether the content is related to what they are looking for.

. Strong Tags

o Search engines: Use them to pick out important key elements within the copy.

o Users: Use them to scan the copy and pick out the most important words.
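To make a few of the elements in this list concrete, here is a minimal Python sketch that pulls the title, the header text and any images without alt text out of a page, using only the standard library. The class name `PageAudit` and the sample HTML are made up for illustration; it is a rough self-check, not a picture of how search engines read pages.

```python
from html.parser import HTMLParser


class PageAudit(HTMLParser):
    """Collect the title, header text, and images that lack alt text."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.headers = []
        self.images_missing_alt = []
        self._current = None  # tag whose text is currently being captured

    def handle_starttag(self, tag, attrs):
        if tag in ("title", "h1", "h2"):
            self._current = tag
            if tag != "title":
                self.headers.append("")
        elif tag == "img":
            attr_map = dict(attrs)
            if not attr_map.get("alt"):
                self.images_missing_alt.append(attr_map.get("src", ""))

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current == "title":
            self.title += data
        elif self._current in ("h1", "h2"):
            self.headers[-1] += data


# Hypothetical page used only for illustration.
sample_html = """
<html><head><title>Blue Widgets</title></head>
<body>
<h1>Buy Blue Widgets</h1>
<img src="widget.png" alt="A blue widget">
<img src="banner.png">
</body></html>
"""
audit = PageAudit()
audit.feed(sample_html)
print("Title:", audit.title)
print("Headers:", audit.headers)
print("Images missing alt text:", audit.images_missing_alt)
```

Anything this sketch reports as missing is missing for users as well, which is the point of the comparison above.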

Many people go crazy when trying to ‘SEO’ their sites, thinking ‘right, here are a few techniques to use, let’s apply them everywhere’. This, unsurprisingly, doesn’t work.

Actual SEO is User Optimization because…

Think about the techniques you’re using to rank higher, and ask yourself: would a user appreciate this? If the answer is yes, then the chances are that a search engine will too.

Here are a couple of examples

You put 35 key phrases into your page copy, all in strong tags.

o Users will say: I can’t read it, as it looks terrible.

o Search engines will say: It is spam

You use clear anchor text throughout your site that is relevant to the page being linked to.

o Users find it really easy to navigate.

o Search engines find that the anchor text is relevant to the page content.

The gist of it is that if you’re pissing off your users with your ‘optimization’ attempts, then you’re probably annoying search engines just as much.

First, write your site, your code and your content for your users. It doesn’t matter how many people find you in Google if your content is worthless to the visitors. Make your code and content for users and you’ll see a fine correlation with your rankings too.

SEO doesn’t mean tricking search engines for rankings.

Search engines always work by looking for the best sites to rank.

SEO just means improving your site until it’s good enough to rank.

The 3 Pillars of SEO, SEM and their Relationship

The 3 Pillars of SEO

SEO getting bad press?

Finding Google search volumes for any given phrase or term is a notoriously hard task. Google offers a number of tools to its AdSense and AdWords customers, and Google Trends gives you a graphical representation (without absolute figures) of search volumes mapped against press coverage.

Searching Google Trends for the term ‘search engine optimization’ returns an interesting result.

Interestingly, while news coverage seems to have increased, the search volume for ‘search engine optimization’ has been steadily decreasing.

How to Maintain Your Search Engine Positions in the Number One Spot

Apparently there are some exceptions, and the reasons are sometimes very hard to identify. However, factors such as the amount of competition for your keywords and the type and number of links you get all affect how fast you get rankings.

This is what you need to do once you start getting high rankings:

1) Check that your link partners are still linking back to your website.

2) Add new articles once in a while; the more you add the better, as long as they are articles you write yourself and not copied.

3) Add nofollow tags to certain links, such as terms and conditions, earnings disclaimers and privacy policies. There is no need for these pages to rank high in search engines.

4) Monitor your search engine rankings for your keywords. If a particular keyword loses rank, work on that keyword. That means getting backlinks so that it regains its position. Use the keyword in the anchor text of each backlink, but always vary it a bit now and then. Varying it means adding a word or two alongside the keyword.

90% of the Rankings Equation Lies in These 4 Factors

I think that sometimes, in the field of search marketing, we try to make the concept of ranking more difficult than it really is. True, there are many ways to build a link, an endless number of keywords, and thousands of unique sources to drive traffic, along with analytics, usability, design, conversion testing, etc. But when it comes to the very precise question of how to rank well for a particular keyword in the standard results at the engines, you’re talking about a few big key components.

#1 – Keyword Use & Content Relevance

Although I don’t believe in keyword density, there is no doubt that using your keywords intelligently to create a page that is relevant to the query and the searcher’s intent is critical to ranking well. You can use the primary keyword phrase as follows:

.In the title tag once, or possibly twice if it makes sense

.Once in the H1 header tag of the page

.At least 3 times in the body copy on the page

.Once in bold

.Once in the alt tag of an image

.Once in the URL

.Once in the meta description tag

.Not in link anchor text on the page itself
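The placement list above can be turned into a quick self-check. The following Python sketch takes the page fields as plain strings and reports which guidelines are met. The function name `keyword_checklist`, the sample page and the idea of matching the URL with hyphens in place of spaces are my own assumptions for illustration; the bold, alt and anchor text items are left out to keep the sketch short.

```python
import re


def keyword_checklist(keyword, title, h1, body, url, meta_description):
    """Check a page's fields against the keyword placement guidelines."""
    kw = keyword.lower()

    def count(text):
        # Case-insensitive count of exact keyword phrase occurrences.
        return len(re.findall(re.escape(kw), text.lower()))

    # Assumption: URLs use hyphens instead of spaces between words.
    url_kw = kw.replace(" ", "-")
    return {
        "title (1-2 times)": 1 <= count(title) <= 2,
        "h1 (once)": count(h1) == 1,
        "body (at least 3 times)": count(body) >= 3,
        "url (once)": url.lower().count(url_kw) == 1,
        "meta description (once)": count(meta_description) == 1,
    }


# Hypothetical page fields used only for illustration.
report = keyword_checklist(
    keyword="blue widgets",
    title="Blue Widgets for Sale",
    h1="Buy Blue Widgets",
    body="Blue widgets are popular. Our blue widgets ship fast. "
         "Order blue widgets today.",
    url="http://example.com/blue-widgets.html",
    meta_description="Shop durable blue widgets online.",
)
for check, passed in report.items():
    print(f"{'ok     ' if passed else 'missing'}  {check}")
```

Treat the result as a reminder list rather than a score; a page that reads badly will not be rescued by passing every check.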

For those who’ve done nonsense-word testing to see how engines respond, you know you can certainly get extra value out of going wild and stuffing the keywords all over the page, but we’ve also seen that once you reach this level of saturation you’re getting about 95% of the value you can get.

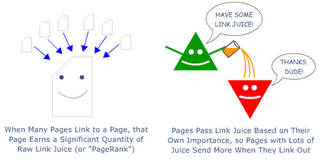

#2 – Raw Link Juice

Some call this PageRank or link weight. It refers to the raw quantity of global link popularity pointing to the page. You can raise it with both internal links and external links. A page with an exceptional amount of global link power can still rank remarkably well in Google & Yahoo!

Link juice operates on the fundamental principle used in the early PageRank formula: pages on the web have some inherent level of significance, and the link structure of the web can help to identify pages with greater and lesser value. Pages linked to by many thousands of pages are very important and, when they link to other pages, those pages gain importance too.
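To see this principle in numbers, here is a simplified Python sketch of PageRank computed by repeated passes over a tiny link graph. It follows the textbook form of the early formula with a damping factor of 0.85, which is an assumption on my part; the function name, the three-page graph and the iteration count are made up for illustration, and none of this reflects how any search engine scores pages today.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank by power iteration.

    links maps each page to the list of pages it links to.
    """
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page starts each round with a small baseline share.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its rank equally among the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank evenly.
                for other in pages:
                    new_rank[other] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical three-page site: both inner pages link back to the home page.
graph = {
    "home": ["about", "products"],
    "about": ["home"],
    "products": ["home"],
}
scores = pagerank(graph)
for page, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{page}: {score:.3f}")
```

In this toy graph the home page ends up with the highest score because every other page links to it, which is the effect that a sensible internal link structure is meant to produce.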

Applying this theory to your own pages, you can see how raw link juice has a large impact on how the search engines score their rankings. Growing global link popularity requires both link building and an intelligent internal link structure.

#3 – Anchor Text Weight

As search engines evolved in the early 2000s, they picked up on the usage of anchor text. The anchor text of links is a critical part of the ranking equation and, seen in great quantity, it can overshadow many other ranking factors. You can see many web pages that are weaker in all of the other three factors I explain here ranking primarily because they’ve earned many thousands of links with the exact anchor text of the phrase they’re targeting.

Note that anchor text comes from both internal and external links. When trying to optimize, think about how you’re linking from your own pages, for example whether you are using generic links or image links.

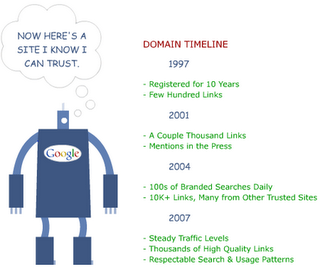

#4 – Domain Authority

This is the most complex of the factors I describe in this post. Basically, it refers to a variety of signals about a site that the search engines use to judge legitimacy. Does the domain have a history in the engine? Do many people search for and use the domain? Does the domain have high-quality links pointing to it from other trustworthy sources?

To influence this positively, what you need to do is operate your site in a way consistent with the engines’ guidelines. If you want to earn a lot of trust early in a domain’s life, get many sites that the engines already trust to link to you.

Mistakes made in SEO

1. Keyword stuffing: Placing the same keyword over and over, or using a hundred different spellings or tenses of the same keyword, in your keyword

2. Duplicate content: Make sure to have some unique and informative content for users on all web pages, and it must be related to your business. Having the same content on different pages of your website should be avoided, as it may have an adverse effect on your search engine rankings.

3. Navigation and internal linking: Good navigation and internal linking also matter a lot. The navigation menu should be easily accessible to users. Make sure that the anchor text linking to pages within your own website is relevant to the target page.

4. Anchor text of inbound links: Having a lot of inbound links is not enough; the anchor text of these links is also very significant. The anchor text should be targeted to your major keywords, and the web page the links point to should contain those keywords.

5. Cloaking: Cloaking is a method used by some webmasters to show different pages to the search engine spiders than the ones regular visitors see. You should always avoid any type of cloaking, as it is strongly prohibited by most major search engines now.

6. Over-optimization: Over-optimization shows that your site has been designed for search engines and not for users. It may lower your search engine rankings, as search engines are now able to detect over-optimized sites, so you must avoid excessive optimization.

7. Impatience: Search engine optimization needs a lot of patience. You must wait a few months for results after optimizing your website. Have a little patience and you will get the results you want if you have correctly optimized your website using ethical SEO techniques.