search engines

Google Leads the Table in Helping Webmasters

Google has stepped up its efforts to help webmasters with website-related problems. It now guides webmasters on maintaining a website effectively so that it earns the rankings it deserves. Google offers support in Google Groups and various other forums, through employee blogs (Matt Cutts' blog), official Google blogs (especially the Webmaster Central Blog) and live Google webmaster chats (two already held), and now we have the Google Trifecta: Webmaster Tools, Analytics and Website Optimizer, coming up in July. We are very excited about this and thought we would create some cartoons representing Google's good work.

1. Matt Cutts, Adam Lasnik and other Googlers are going all out to impress webmasters with their active participation in various forums and blogs.

2. Matt Cutts speaks at various conferences to help webmasters understand how Google works.

3. Google is becoming increasingly popular among webmasters and site owners for its efforts to get closer to them. Two successful Google Webmaster live chats show the strategy is working.

4. Matt Cutts answering Q&A at a webmaster conference.

5. Google has been actively promoting the Google Webmaster Central Blog and wants webmasters and site owners to read it. Its Webmaster Tools are also becoming increasingly popular.

6. Calling all webmasters to visit and participate in the Google Webmaster Help Groups.

Vijay

Search Engine Genie.

Yahoo Chats with Semantic Web Expert Ben Adida

RDFa launched!

Yahoo recently published an interview with web expert Ben Adida. Since announcing its intention to support semantic markup, Yahoo has continued to work with the best of the semantic markup community. Ben is a faculty member at Harvard Medical School and the Children's Hospital Informatics Program, as well as a research fellow with the Center for Research on Computation and Society at the Harvard School of Engineering and Applied Sciences. He is also the Creative Commons representative to the W3C and chair of the RDF-in-HTML task force, focusing on bridging the semantic and clickable webs.

Asked why RDFa had been so long in the making, Ben replied that the delay was for a good reason: the team wanted enough flexibility in data management to serve both current and future uses.

Y!: What can I do with RDFa?

BA: You can tell the world what various components on your web page mean by marking up things like:

* The title of a photo

* Your name and contact information

* The license under which you’re distributing your latest MP3

* The ingredients of a cooking recipe

* The price of an item

* A gene on which you recently wrote a paper

* … Anything that you want to make more machine-readable

With RDFa, you can reuse existing concepts, e.g. the title and price of an item, no matter what that item is. If there’s a field you need that doesn’t exist, you can create it.

This level of granularity encourages you to mark up your content as fully as possible, while letting applications consume only as much of the data as they need.
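To make the idea concrete, here is a minimal Python sketch that pulls property/value pairs out of a small RDFa-annotated HTML fragment using only the standard library. The fragment, the Dublin Core `dc:` prefix and the `RDFaPropertyCollector` class are illustrative assumptions, not part of the interview. A real RDFa processor also resolves prefixes, subjects (`about`) and nesting, which this sketch ignores.

```python
from html.parser import HTMLParser

# Hypothetical RDFa-annotated fragment: a photo's title, creator and license.
FRAGMENT = """
<div xmlns:dc="http://purl.org/dc/elements/1.1/" about="/photos/sunset.jpg">
  <span property="dc:title">Sunset over the bay</span>
  <span property="dc:creator">Jane Doe</span>
  <a rel="license" href="http://creativecommons.org/licenses/by/3.0/">CC BY</a>
</div>
"""


class RDFaPropertyCollector(HTMLParser):
    """Collect (property, value) pairs; a sketch, not a full RDFa processor."""

    def __init__(self):
        super().__init__()
        self.pairs = []        # collected (property, value) tuples
        self._pending = None   # property still waiting for its text content

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if "property" in attrs:
            if "content" in attrs:
                # An explicit content attribute overrides the element's text.
                self.pairs.append((attrs["property"], attrs["content"]))
            else:
                self._pending = attrs["property"]
        if attrs.get("rel") == "license" and "href" in attrs:
            self.pairs.append(("license", attrs["href"]))

    def handle_data(self, data):
        # The first non-blank text after a property attribute becomes its value.
        if self._pending and data.strip():
            self.pairs.append((self._pending, data.strip()))
            self._pending = None

    def handle_endtag(self, tag):
        # Forget a property whose element closed without any text.
        self._pending = None


collector = RDFaPropertyCollector()
collector.feed(FRAGMENT)
for prop, value in collector.pairs:
    print(f"{prop}: {value}")
```

An application that only cares about licenses could read just the `license` pair and ignore the rest, which is the "consume only as much of the data as they need" point above.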

Microformats, eRDF, AB Meta and RDFa all serve the same goal.

The advantage of RDFa over microformats, eRDF and AB Meta is that microformats can have field conflicts, whereas RDFa avoids them, so fields such as titles can be reused. As for eRDF, it can express much less data than RDFa.

To the critics, Ben says it is a matter of finding the right compromise, and he considers RDFa and eRDF equally complex as far as authors are concerned. RDFa is more difficult to write than microformats, but that is because microformats are limited in scope, and microformats are quite costly to use. Over the next few months, they plan to help publishers produce RDFa and help tool builders parse it correctly.

More information here

PageRank Craze: Whitebar, Greybar or Greenbar

Martin Buster's Good Post on Brett's Link Theme Pyramid

Martin Buster, a WebmasterWorld moderator, made an interesting post discussing links in terms of Brett Tabke's link theme pyramid. He says:

“1. Anchor text should match the page it’s linking to. If the anchor says red widgets, particularly for a page meant to convert for red widgets, it should have the phrase red widgets on the page.

I know some people will say this opens you up to OOP but I think as long as there are variations in the links, then you’re good to go. Because of the natural non-solicited links I’ve received on some sites, I’ve become a believer in the ability of the linking sites relevance to a query being able to transfer over to the linked-to page.

Why would one consider a page about red and blue widgets to be relevant for blue widgets? Looking at it from the point of view of relevance to the query, does it make sense to return a page about red and blue when the user is looking for blue? Looking at it from the point of conversions, if someone is querying for blue doesn’t it make sense to return a page dedicated to blue?

PPC advertisers understand the value of having a landing page that matches the query. PPC advertisers understand the value of an optimized ad for inspiring targeted and converting click-through. Organic SEO should follow suit. A dedicated organic page can utilize a specific title and meta description for the same purpose. This means building specific links to specific pages.

I don’t think it’s adequate for the search user to query babysitting for boys and get a page for babysitting in general. So why build links to a general page when a specific page will not only be more relevant but convert better?

2. Hubs: Getting back to Brett's theme pyramid, imo general anchors should point to general pages. Specific anchors should point to the specific pages. I don't understand why people are trying to obtain specific anchors to general pages.

Why are hub pages being created that are simply a big page-o-links to specific pages? Hubs are great starting points, imo they should be more than a page of links. I think this is especially critical for e-commerce where high level topics include brands or kinds of products and sub-pages include models or specific manufacturers.

These second level pages can be cultivated to perform for more general terms, but also in conjunction with, for example buy-cycle long tail phrases like reviews, comparison, versus, etc. Take that into account for the link building.

3. Is the home page really the most relevant page of the link? Here is another place where link building is wasted, imo. I think it makes sense to focus on relevance/links to supporting pages that then create a groundswell of relevance back to the home page for the more general terms.

Reviewing affiliate conversions and AdSense earnings, it’s been my experience that specific pages perform better than general home pages. If you’re lucky or by design people will click through to the pages they are looking for. But shouldn’t you be showing those pages to the user first? And don’t you think the search engines want to show those specific pages too? I think this may explain some ranking drops some people are experiencing for home pages that used to rank for multiple terms.

4. Longtail Matching: This is where on page SEO comes into play. This refers to geographic and buy-cycle phrases. Building partial matches works, imo. Someone showed me a site that was a leader in specific searches but those pages would perform better if they had the names of cities and provinces on the page. Ranking for Babysitting for Boys is fine, but Babysitting for Boys + (on page) Tampa is better."
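Point 1 of the quote (anchor text should match the page it links to) is easy to spot-check. Below is a minimal Python sketch, assuming you already have the HTML of the linking page and of each target page; the `pages` dictionary, the URLs and the function names are hypothetical. It extracts each link's anchor text and reports whether that exact phrase appears in the target page's visible text.

```python
from html.parser import HTMLParser


class AnchorExtractor(HTMLParser):
    """Collect (href, anchor text) pairs from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            anchor = " ".join("".join(self._text).split())  # collapse whitespace
            self.links.append((self._href, anchor))
            self._href = None


class TextExtractor(HTMLParser):
    """Collect visible text, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.chunks.append(data)


def page_text(html):
    parser = TextExtractor()
    parser.feed(html)
    return " ".join(" ".join(parser.chunks).split()).lower()


def anchor_matches(linking_html, pages):
    """For each link to a known page: does its anchor phrase appear on that page?"""
    extractor = AnchorExtractor()
    extractor.feed(linking_html)
    results = {}
    for href, anchor in extractor.links:
        if href in pages:
            results[(href, anchor)] = anchor.lower() in page_text(pages[href])
    return results


# Hypothetical pages for illustration.
linking = '<p>See our <a href="/red">red widgets</a> and <a href="/all">blue widgets</a>.</p>'
pages = {
    "/red": "<h1>Red Widgets</h1><p>Our red widgets ship today.</p>",
    "/all": "<h1>Widgets</h1><p>Red and green models.</p>",
}
print(anchor_matches(linking, pages))
```

Exact phrase matching is deliberately strict here; it flags the "specific anchor to a general page" case from point 2, but it will not recognize the anchor variations the quote recommends.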

Source: webmasterworld.com/link_development/3676520.htm

Matt Cutts Says Widgets Are OK as Long as They're Not Abused

In a recent interview with Eric, Matt Cutts, head of Google's webspam team, agreed that widgets can be a type of link bait if used properly.

We at Search Engine Genie provide the PageRank Button, a useful widget that lets your visitors see your page's PageRank without the Google Toolbar: they can view it right on the button. Our PageRank Button uses our custom code to query Google's database for a page's PageRank and displays it on your website. We are developing more widgets, especially one that will query Google, Yahoo and MSN for the number of pages indexed and the number of backlinks and display them on your page. It will be released in a week.

Matt Cutts also reiterated that widgets used for spamming the search engines are not acceptable, for example hiding links in a web counter or linking to random sites just to push rankings. Links placed in a non-embedded tag or in a noscript tag when using widgets are also spam. We at Search Engine Genie never resort to those tactics.
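To audit a widget's embed code for one of the patterns described above, a minimal Python sketch can flag any `<a href>` nested inside a `<noscript>` element. The embed snippet, its URLs and the `NoscriptLinkFinder` class are hypothetical, and this checks only this single pattern, not every form of widget spam.

```python
from html.parser import HTMLParser


class NoscriptLinkFinder(HTMLParser):
    """Record the hrefs of <a> tags that appear inside <noscript>."""

    def __init__(self):
        super().__init__()
        self.depth = 0           # current <noscript> nesting depth
        self.hidden_links = []

    def handle_starttag(self, tag, attrs):
        if tag == "noscript":
            self.depth += 1
        elif tag == "a" and self.depth:
            href = dict(attrs).get("href")
            if href:
                self.hidden_links.append(href)

    def handle_endtag(self, tag):
        if tag == "noscript" and self.depth:
            self.depth -= 1


# Hypothetical widget embed code with a link tucked into <noscript>.
embed = """<script src="https://widgets.example.com/counter.js"></script>
<noscript><a href="https://unrelated.example.net/">cheap stuff</a></noscript>
<a href="https://widgets.example.com/">Get this counter</a>"""

finder = NoscriptLinkFinder()
finder.feed(embed)
print(finder.hidden_links)
```

The visible "Get this counter" link is not flagged, since it sits outside `<noscript>`; whether such a credit link is acceptable is a separate judgment the code does not make.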

As we discussed before, many aggressive SEOs contact WordPress theme developers and insert their link as a credit back to their site. This is another aggressive tactic, and Matt Cutts has warned against using such tactics to boost search engine rankings.

You can expect a lot more widgets from Search Engine Genie in the near future; our programmers are working on them. We want Search Engine Genie to be a useful hub for webmasters and site owners.

Good Luck,

Search Engine Genie Team

Losing the Viacom Lawsuit Might Cost Google $250 Billion

A couple of weeks back we reported on Viacom's lawsuit against YouTube and Google for allowing its copyrighted videos to be posted on YouTube.

Google responded strongly in its counter-filing in court. Google's filing says: "By seeking to make carriers and hosting providers liable for Internet communications, Viacom's complaint threatens the way hundreds of millions of people legitimately exchange information, news, entertainment, and political and artistic expression." We strongly support Google's position.

Researching Viacom's claim further, we found some hard facts. What currently looks like a small lawsuit could blow up like a nuke if Google ever loses it. Viacom claims YouTube hosts more than 150,000 copyrighted videos and is seeking a billion dollars for them. So if Google loses this lawsuit, I am sure other companies won't sit quiet.
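For scale, here is the arithmetic implied by the two figures quoted above (about a billion dollars claimed over more than 150,000 videos), as a quick Python check. It only divides the reported numbers; it says nothing about how a court would actually calculate damages, and because the video count is "more than 150,000", the real per-video figure would be somewhat lower.

```python
claimed_damages = 1_000_000_000  # USD, the figure reported for Viacom's claim
videos = 150_000                 # copyrighted videos Viacom cites (a lower bound)

# Implied damages per video (an upper bound, since videos >= 150,000).
per_video = claimed_damages / videos
print(f"Implied damages per video: ${per_video:,.2f}")
```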

Companies like CBS and NBC will jump in and claim their own damages, and Google will have to face many companies around the world. I am sure a single lost YouTube lawsuit could cost Google 50 billion dollars if other companies claim their share.

Google is a search engine built on other websites' information in its search results. It keeps a cache of copyrighted pages crawled on the web, so if YouTube is wrong, then Google Search is wrong too, and with all the billions of sites out there, Google would go bankrupt. I estimate Google would need to pay 200 billion dollars. Google News also recently faced a major lawsuit from a Belgian newspaper group, which wants more than 75 million dollars because its news appeared in Google News.

It seems everyone wants a piece of the Google pie.

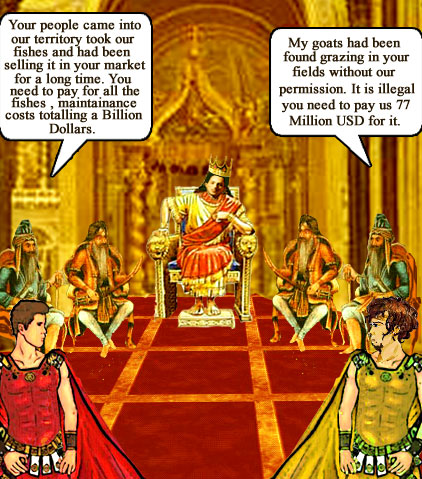

Look at the following set of images, in which I tried to portray the two lawsuits.

This image shows the Google Kingdom. Does that look like Sergey Brin on the king's chair? 😉

These are Google country guys fishing in Viacom territory. Is Google responsible for this?

Google country guys selling fish taken from the river in Viacom territory.

This image criticises the Belgian newspaper group: goats from the Belgian newspaper group's territory are grazing in Google's territory.

Google’s Market share / wealth

Viacom and Belgium Newspaper claim

Vijay

How Google Handles Scrapers – Nice Information from the Google Team

It's been a long battle between Google, webmasters and content thieves who scrape information from a website and display it on their own sites to get traffic. Many webmasters have been complaining about this problem for a long time. As far as I know, Google already does a good job with content thieves and scraper sites. Now it has opened up about its inner workings and how it tackles the problem.

We face two types of duplicate content problems: duplicates within a site and duplicates on external sites. Duplicate content within a site is easy to fix, since we have full control over it. We can find every area that might create two pages with the same content and either block one version from being crawled or remove links to the pages that might be duplicates.
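Finding exact duplicates within your own site can be sketched in Python by normalizing each page's text and grouping URLs by a hash of it. The URLs and page text below are hypothetical; in practice you would feed in the extracted text of pages from a crawl of your own site.

```python
import hashlib
from collections import defaultdict


def fingerprint(text):
    """SHA-256 of the lowercased, whitespace-collapsed text."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages):
    """Return sorted groups of URLs whose normalized text is identical."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical pages: a sort parameter and a print view duplicate /widgets.
pages = {
    "/widgets": "Blue widgets in stock.",
    "/widgets?sort=price": "Blue  widgets in STOCK.",
    "/print/widgets": "Blue widgets in stock.",
    "/about": "About our company.",
}
print(find_duplicates(pages))
```

Each group points to URLs where you would pick one version to keep and block or de-link the others, as described above.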

External sites are always a problem since we don't have any control over them. Google says it now effectively tracks down likely duplicates, gives maximum credit to the original source and filters out the rest of the duplicates.
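Google does not publish exactly how it matches scraped copies to the original, so the following is only a generic illustration of one well-known near-duplicate technique, word shingling with Jaccard similarity, written in Python. The texts, the shingle size `k=3` and any threshold you would apply to the score are assumptions.

```python
def shingles(text, k=3):
    """Set of k-word shingles (overlapping word sequences) from lowercased text."""
    words = text.lower().split()
    if len(words) < k:
        return {tuple(words)}
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a, b):
    """Jaccard similarity |A & B| / |A | B| of two shingle sets."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


# Hypothetical texts: an original, a lightly altered scraped copy, and an unrelated page.
original = "our guide explains how to fix duplicate content within a site"
scraped = "our guide explains how to fix duplicate content within a site fast"
unrelated = "the weather in tampa is sunny and warm all week"

s_orig = shingles(original)
print(round(jaccard(s_orig, shingles(scraped)), 2))
print(round(jaccard(s_orig, shingles(unrelated)), 2))
```

A high score only says two pages are near-duplicates; deciding which one is the original source needs other signals, which this sketch does not attempt.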

If you find a site ranking above you with your content, Google suggests:

- Check if your content is still accessible to our crawlers. You might unintentionally have blocked access to parts of your content in your robots.txt file.

- You can look in your Sitemap file to see if you made changes for the particular content which has been scraped.

- Check if your site is in line with our webmaster guidelines.
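The first two checks can be scripted. Below is a minimal Python sketch using the standard library's `urllib.robotparser` and `xml.etree.ElementTree`; the robots.txt rules, the sitemap contents, the URL and the helper names are hypothetical, and the robots.txt is parsed from a string rather than fetched, so nothing is downloaded.

```python
import urllib.robotparser
import xml.etree.ElementTree as ET

# Hypothetical robots.txt that accidentally blocks the whole article directory.
ROBOTS_TXT = """\
User-agent: *
Disallow: /articles/
"""

# Hypothetical sitemap entry for the scraped article.
SITEMAP_XML = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/articles/duplicate-content.html</loc>
       <lastmod>2008-06-10</lastmod></url>
</urlset>"""

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def crawlable(robots_txt, url, agent="Googlebot"):
    """True if the given robots.txt lets `agent` fetch `url`."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


def sitemap_lastmod(sitemap_xml, url):
    """Return the <lastmod> recorded for `url` in the sitemap, or None."""
    root = ET.fromstring(sitemap_xml)
    for entry in root.findall("sm:url", NS):
        if entry.findtext("sm:loc", namespaces=NS) == url:
            return entry.findtext("sm:lastmod", namespaces=NS)
    return None


article = "https://www.example.com/articles/duplicate-content.html"
print(crawlable(ROBOTS_TXT, article))        # blocked by Disallow: /articles/
print(sitemap_lastmod(SITEMAP_XML, article))
```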

For more information, read this official blog post.

Digg Effect – The Effect of Ranking on the Digg Homepage

Digg killed my friend's server. A few days back, one of my friend's articles was featured on the Digg homepage. Within a few minutes his server couldn't withstand the massive traffic and went down for 5 hours. Is a Digg homepage ranking worth it? Has anyone else had that experience?

Please share it here

Chris.

Is Microsoft indirectly going for a Proxy fight?

The law firm BLB&G LLP is taking on Yahoo on behalf of some shareholders for not accepting Microsoft's bid. This page has some very useful information that has just been revealed.

On February 21, 2008, BLB&G, on behalf of Plaintiffs, filed a Class Action Complaint against Yahoo and its board of directors (the “Board”), alleging that they have acted to thwart a non-coercive takeover bid by Microsoft, which would provide a 62% premium over Yahoo’s pre-offer share price, and have instead approved improper defensive measures and pursued third party deals that would be destructive to shareholder value. Yahoo’s “Just Say No to Microsoft” approach is a result of resentment by the Board, and not any good faith focus on maximizing shareholder value. Microsoft attempted to initiate merger discussions in late 2006 and early 2007, but was rebuffed, supposedly so Yahoo’s management could implement existing strategic plans. None of those initiatives improved Yahoo’s performance. On February 1, 2008, over a year after its initial approach, Microsoft returned, offering to acquire Yahoo for $31 per share, representing a 62% premium above the $19.18 closing price of its stock on January 31, 2008.
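The 62% premium in the complaint can be checked from the two prices it quotes, the $31 offer and the $19.18 closing price on January 31, 2008, using premium = (offer - close) / close. A quick Python check:

```python
offer = 31.00  # Microsoft's offer per share (USD)
close = 19.18  # Yahoo closing price on January 31, 2008 (USD)

# Premium as a percentage of the pre-offer price.
premium = (offer - close) / close * 100
print(f"Premium: {premium:.1f}%")
```

The result is about 61.6%, which rounds to the 62% stated in the filing.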

Looks like the proxy fight is about to move forward more aggressively. Let's wait and see.

Search Marketing Expo Conference – Recap and Write-Up

Barry of Search Engine Roundtable has a very good write-up of the recently concluded Search Marketing Expo conference, organized by search engine industry expert Danny Sullivan. Barry also blogs for Search Engine Land, Danny's official blog. Please read Barry's write-ups here.

My favourite is the tip Roger provided on finding .edu domains for links:

“Tips in Yahoo Link Commands:

linkdomain:example.com site:.edu “bookmarks”

linkdomain:example.com site:.edu “links”

linkdomain:example.com site:.edu “favorite sites”

linkdomain:example.com site:.edu “your product or service”

More examples:

linkdomain:example.com site:.edu sponsors

linkdomain:example.com site:.edu donors

linkdomain:example.com site:.org sponsors

linkdomain:example.com site:.org benefactors”
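Roger's list is really one template with different footprints, so it can be generated rather than typed. A small Python sketch: `example.com` is the placeholder from the tip, the footprints repeat the ones above, the `link_queries` helper is hypothetical, and the output is plain query strings in the tip's format (no search engine is contacted).

```python
def link_queries(domain, tld, footprints, quote=True):
    """Build 'linkdomain:<domain> site:<tld> <footprint>' query strings."""
    queries = []
    for phrase in footprints:
        # The first group of tips quotes the footprint; the second does not.
        term = f'"{phrase}"' if quote else phrase
        queries.append(f"linkdomain:{domain} site:{tld} {term}")
    return queries


for q in link_queries("example.com", ".edu", ["bookmarks", "links", "favorite sites"]):
    print(q)
for q in link_queries("example.com", ".org", ["sponsors", "benefactors"], quote=False):
    print(q)
```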
