Is over-optimization bad for a website?

Robert from Charlotte has an interesting question. “Is over optimization bad for a website? Ex. Excessive use of nofollow.”

Very good question! First off, if it’s your website, you can use nofollow all you want. Don’t worry about this: there is no penalty for excessive use of nofollow, and you are not going to get in trouble because of that. As for over-optimization, there is nothing in Google that we call an over-optimization penalty. But a lot of the time, “over-optimization” is kind of a euphemism for being a little bit spammy. “Oh, my keyword density is a little high, I’m over-optimized for keyword density” often means “I repeat my keywords so many times that regular users get annoyed and competitors are like, where did this content come from?” So there is nothing where we say, “Yeah, this has the hallmarks of an SEO inside,” but if you have over-optimized, you often end up with a site that people don’t necessarily like, or that looks junky or scuzzy or scummy or just bad in some way. So it’s not as if we are going to say, “We detect signs of SEO on this site,” but certainly you can go overboard and have too many keywords, keyword stuffing, hidden text or that kind of stuff. So if you are worried about that, just come back a little bit, edge back and try to make it better for users. But don’t worry about “Oh, I’ve got too many nofollow tags” or anything like that. That won’t get you any sort of penalty.
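To make the “keyword density” idea above concrete, here is a minimal sketch that measures what share of the words on a page are a given keyword. The function name, the tokenization rule and the sample text are assumptions for illustration only; this is not a description of how Google scores pages.

```python
import re

def keyword_density(text, keyword):
    """Return the fraction of words in `text` equal to `keyword` (case-insensitive)."""
    # Assumed tokenization: runs of letters, digits and apostrophes count as words.
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        return 0.0
    hits = sum(1 for word in words if word == keyword.lower())
    return hits / len(words)

# Hypothetical copy that repeats its keyword far too often.
sample = "Cheap shoes! Buy cheap shoes at our cheap shoes store."
print(f"{keyword_density(sample, 'cheap'):.2f}")  # prints 0.30
```

A number like this is only a rough self-check: if a single word makes up a large share of the copy, regular readers will probably find it annoying, which is the real problem described above.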

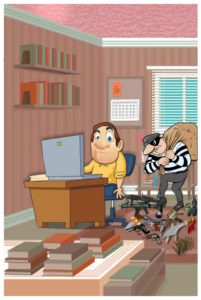

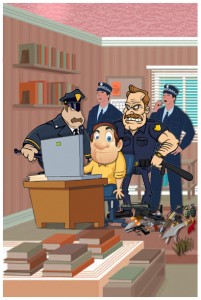

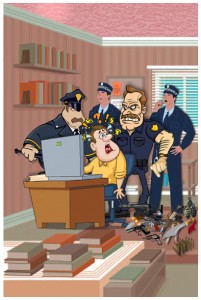

Operation Kill competitor – successful

Google has long claimed that no one can hurt a competitor’s site. We believed Google all this time, but we recently wanted to test it. We took a main tail keyword for which our client ranks at position 12. Our client wanted us to push them into the top 10 results, so we tried our secret weapon against our competitors: we added a bunch of links from sites deemed low quality by Google. We added just 30 unique links from different sites to each competitor and BOOM, within 2 weeks we saw the competitor sites drop 6 to 8 positions. One competitor dropped from position 6 to 14; another dropped from position 9 to 17. It could be a coincidence, but there was no update during the time we tested, and those sites had been sitting in those positions for about 2 years. So we don’t know whether what Google claims is true, but we believe we can sabotage a competitor’s rankings by pushing low-quality links. When I say low-quality links, I mean that the links we added are from pages with good PageRank that are strong themselves but come from a negative area.

We wish Google would seriously look into this issue. If you think I am lying, we don’t care, but it is 100% true: we tested it and the results are positive. So why disclose a secret weapon? LOL, we don’t want to use it as a weapon. We just tested it; we prefer to do SEO the search-engine-friendly way. We ran this test to raise awareness.

Do you disclose the test results?

Of course not. We don’t want to put our clients’ rankings and online business in jeopardy.

What are some best practices for moving to a new CMS?

Here is a fun question from Mani in Delhi. Mani asks, “We are changing a fairly large HTML site to CMS. What are the essentials to keep in mind so that we do not lose our search rankings?”

Very good question! I have seen a lot of people, a lot of websites, go through a 2-year redesign only to launch with a completely new underlying software package, platform, CMS or anything, and a completely new HTML layout, and suddenly their rankings are not what they were expecting them to be, and then they are stuck. Was it because we changed the layout, or because we changed the CMS, or because we changed the URL structure? So one big piece of advice that I would like to give is: try not to launch all of this at once. For example, if your CMS means your layout has to change, you can mock that up. You can try to change your existing HTML so that it looks the way it is going to look coming from your content management system, and then make sure that your rankings don’t change. They shouldn’t change very much at all, but if you change a whole bunch of stuff on your page, that can affect how Google scores that page. So instead of changing the CMS and changing the layout together, see if you can change only one at a time. Try not to change the URL structure. Another thing is, if you are really worried, you can move only one directory over to the CMS at first. So it is something like dipping your foot in the water. But it’s just like any other scientific thing: if you are going to change 4 things at once and then your rankings change, you don’t know which of the 4 things it was. So if at all possible, no. 1, try to change it so that everything from your CMS generates identical HTML, and no. 2, if you are going to go through a big redesign, try to put some mock-ups out relatively early. Maybe you can do some A/B testing, see how it goes with users, see how it goes in terms of search engine rankings. But don’t work for 2 years without even trying it out on the search engines just to see how it might be scored differently.

That said, normally if you are changing things around it doesn’t make a huge impact. It is possible, so do some trials if you can, but it should be very doable to migrate between different content management systems without losing your rankings.
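One way to check the “identical HTML” advice before launch is to compare the old static page with what the CMS renders for the same URL. The sketch below uses only the Python standard library; the class and function names and the two sample pages are assumptions for illustration, and comparing a tag-and-text outline is just one possible notion of “identical”.

```python
from difflib import SequenceMatcher
from html.parser import HTMLParser

class OutlineParser(HTMLParser):
    """Collect start tags and non-empty text chunks in document order."""

    def __init__(self):
        super().__init__()
        self.items = []

    def handle_starttag(self, tag, attrs):
        self.items.append(f"<{tag}>")

    def handle_data(self, data):
        text = " ".join(data.split())  # collapse whitespace, drop blank chunks
        if text:
            self.items.append(text)

def outline(html):
    parser = OutlineParser()
    parser.feed(html)
    parser.close()
    return parser.items

def similarity(old_html, new_html):
    """Return 1.0 when both pages have the same tag and text outline."""
    return SequenceMatcher(None, outline(old_html), outline(new_html)).ratio()

# Hypothetical pages: the hand-written original and the CMS output.
old_page = "<html><body><h1>Widgets</h1><p>Our widgets are great.</p></body></html>"
new_page = "<html>\n<body>\n<h1>Widgets</h1>\n<p>Our widgets are great.</p>\n</body>\n</html>"
print(similarity(old_page, new_page))  # prints 1.0
```

Running this over one directory at a time fits the advice to migrate in small steps: a score below 1.0 points to the pages where the CMS changed something you did not intend to change.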

Will I be penalized if my URLs all have the same priority?

AndyPTG from Boston asks, “Will I be penalized for having every file in my XML sitemap listed with the same priority? Google Webmaster Tools gives me a warning on that. But at the same time the priority field is optional.”

I definitely don’t think you’ll be penalized. If you give all of those files the same priority, then we’ll just try to sort out which ones we think are the most important on our own. So I definitely wouldn’t worry about having a priority listed for every single one. It’s not like we are going to apply some scoring penalty or anything like that. It’s totally fine and totally optional. If you do have some information that you can put under priority, that’s great, but it’s not required, and it won’t get you into trouble whether you do or don’t have it.
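For reference, here is a minimal sketch of generating a sitemap in which the priority tag is simply left out when you have nothing meaningful to say. The `build_sitemap` helper and the example.com URLs are assumptions for illustration; the `urlset`, `url`, `loc` and `priority` element names come from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Build sitemap XML from (url, priority) pairs; a priority of None omits the tag."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, priority in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if priority is not None:
            # The protocol allows values from 0.0 to 1.0.
            ET.SubElement(url, "priority").text = f"{priority:.1f}"
    return ET.tostring(urlset, encoding="unicode")

# Hypothetical site: only the home page carries an explicit priority.
xml_text = build_sitemap([
    ("https://www.example.com/", 1.0),
    ("https://www.example.com/about", None),
])
print(xml_text)
```

Omitting the tag and giving every URL the same value tell a search engine the same thing, namely nothing about relative importance, which matches the answer above.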

What’s the preferred way to check for links to my site?

Well, I’ve explained this before: the link: operator is accurate, but it only shows you a subsample of your links. So my preferred way would be to log into Google’s Webmaster Tools, where we will show you a very exhaustive list of backlinks, pretty much all the backlinks that we know of. The nice thing is that other people can’t spy on that, so your competitors can’t look at your backlinks. You can also see backlinks using tools from other companies, like Yahoo Site Explorer: if you type a URL into the Yahoo Site Explorer search box, you can explore the backlinks that way. So those are a couple of tools that you can use. It’s not that link: shows wrong links; it’s just that we don’t show all of the links that we know about. So there are a lot of different options if you want to find out more about backlinks from different websites.

Should I include my logo text using ‘alt’ or CSS?

Richard M from Australia asks, “If you have a company logo on your site, what is the best way to include the text of the logo for SEO purposes? ALT tag, CSS hiding, or does it matter?”

Yes, it does matter. It’s much better to use an ALT tag than to do something like hiding text with CSS, 9000 pixels to the left of the webpage or something like that. That’s what the ALT tag was more or less built for, or ALT attribute or whatever you want to call it. So yes, go ahead and use ALT; that’s a fantastic way to say “this is the text that’s in my logo.” Search engines can read that and use that. I would not hide it using CSS or anything like that when there is a perfectly valid, perfectly simple way to do it that does the job just fine.
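As a quick way to audit this on your own pages, the sketch below lists images that have no ALT text. The class name, helper name and sample markup (including the “Acme Widgets” logo) are made up for illustration; it relies only on Python’s standard `html.parser` module.

```python
from html.parser import HTMLParser

class AltChecker(HTMLParser):
    """Record the src of every <img> whose alt attribute is missing or blank."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attr_map = dict(attrs)
            if not (attr_map.get("alt") or "").strip():
                self.missing.append(attr_map.get("src"))

def images_missing_alt(html):
    checker = AltChecker()
    checker.feed(html)
    checker.close()
    return checker.missing

# Hypothetical markup: the logo carries its text in alt, the banner does not.
page = '<img src="logo.png" alt="Acme Widgets"><img src="banner.png">'
print(images_missing_alt(page))  # prints ['banner.png']
```

Note that an empty alt can be a deliberate choice for purely decorative images, so treat the result as a list to review, not a list of errors.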

What types of directories are seen as sources of paid links?

We have a question from Fabio Ricotta from Brazil. Fabio asks, “Will Google see Yahoo! Directory and BOTW as sources of paid links? If no, why is this different from other sites that sell links?”

I’ve answered this question in the past, but since enough people are curious to ask, I’ll do the spiel again. Whenever we look into whether a directory is useful to users, we ask: what is the value-add of that directory? Do they go and find entries on their own, or do they only wait for people to come to them? How much do they charge, and what is the editorial service that is being charged for? If a directory takes $50 and every single person who ever applies automatically gets in for that $50, there is not as much editorial oversight as in something like the Yahoo directory, where people do get rejected. So if there is no editorial value-add, then that is much closer to paid links. In fact, our webmaster quality guidelines used to have a guideline that said “submit your site to directories,” and we gave a few examples of directories. What we found was happening was that people would get obsessed with that line and go find a lot of directories, and there were a lot of people who were like, “Oh well, if people are looking for directories, I’ll make a directory.” So you saw all these fly-by-night directories that would start up and say, “I am a PageRank 6 directory; you give me $50 and you’ll automatically get an entry in my directory.” And it’s not as if those types of listings are the sort of things that users really value or that do a lot of good in our search results. So we ended up taking that mention out of our webmaster guidelines so that people don’t get obsessed with directories and think, “Yes, I have to go find a bunch of different directories to submit my site to.” There are some directories that do carry weight; the Yahoo directory does a good job of exercising editorial discretion, actually doing a review and rejecting a significant number of entries that are low quality. So the question in your mind whenever you consider a directory should be: what is the value-add, and do they have high standards?

I have a blog post out there that talks about other factors of a directory and whether we might consider it as real. But those are some of the questions that you should ask yourself. So by those measures, no, the Yahoo directory is not just automatically paid links. Typically paid links are going to be much lower quality and much more automatic: people will give you whatever anchor text you want, all those sorts of things. So there is a difference. At the same time, don’t go overboard worrying about submitting your site to every directory. Make a great site and make sure that people find out about it. Those are the things that really make a difference, not “I have to submit my site to at least 5 directories or at least 10 directories” or anything like that.

What impact does “page bloat” have on Google rankings?

A question from Deepesh in New York. Deepesh asks, “What impact does ‘page bloat’ have on Google rankings? Most of the winners in SEO seem to have very simple pages (very few images, HTML-only design), sometimes to the detriment of the user in a poorly designed page.”

I wouldn’t jump to conclusions. Back in the early days of Google, we used to truncate pages at about 100 kilobytes. So if you had page bloat back then, I could imagine that your content might get snipped off halfway through and we wouldn’t see all of it. But Google does a much better job of seeing the entire page now; we don’t truncate at 100 KB anymore, and we can deal with larger pages. So I wouldn’t really worry about page bloat; we tend to do a very good job of finding the content. If you have extra images, don’t worry about that; if you have extra HTML markup, don’t worry about that. As for the assumption that the only winners are SEO’d pages that don’t have very many images or that have very thin HTML designs, I’m not sure I’d agree with that. If you think about it, there are a lot of really good sites and well-known brands that do well, and they often have very big pages; they might have Flash, they might have a lot of images or things like that. So there might be some niches that you are paying attention to where it looks like only these focused pages with a lot of content do well. But we try to return the best page, the most relevant page, no matter what the query is. So don’t worry about it to the degree that you start making radical changes and pruning down content. Go ahead and do what you think is best for your users, make the most informative and relevant pages that you can, and we’ll try to return them. We do a very good job of handling bloat and finding what the real content is on the page.

Do dates in URLs determine freshness?

Filipe Santos from New York asks, “Do dates in the URL of blogs or websites help determine freshness of the content or is it largely ignored?”

Well, I think dates in the URL or the content can be useful, but people can also try to optimize that and say that their pages are always 10 minutes old. We have our own measures of how fresh pages are, for example the first time our crawler saw a page. We also look at what happens when we revisit pages and how much the content changes. So I think it’s a good idea to have the date clearly somewhere on your page so that people can find out how old the content is. But I don’t think you necessarily need to do it for Googlebot. So it’s a good usability thing, but Google has its own ways to measure how fresh various content is. You don’t have to worry about having the date in the URL or the content just to convince Google it’s fresh; we already do that computation and figure it out for ourselves.

Are CSS-based layouts better than tables for SEO?

Frankly, I wouldn’t worry about it. We see tables, we see CSS, and we have to handle both, so we try to score them well no matter what kind of layout mechanism you use. Frankly, I would use what’s best for you. A lot of people these days tend to like CSS because it’s easy to change your site and easy to change the layout. Tables kind of have this Web 1.0 connotation to them. But if you have the best site, we will try to find it and we’ll try to rank it highly regardless of whether it’s table-based or CSS-based.
